Introduction [2]

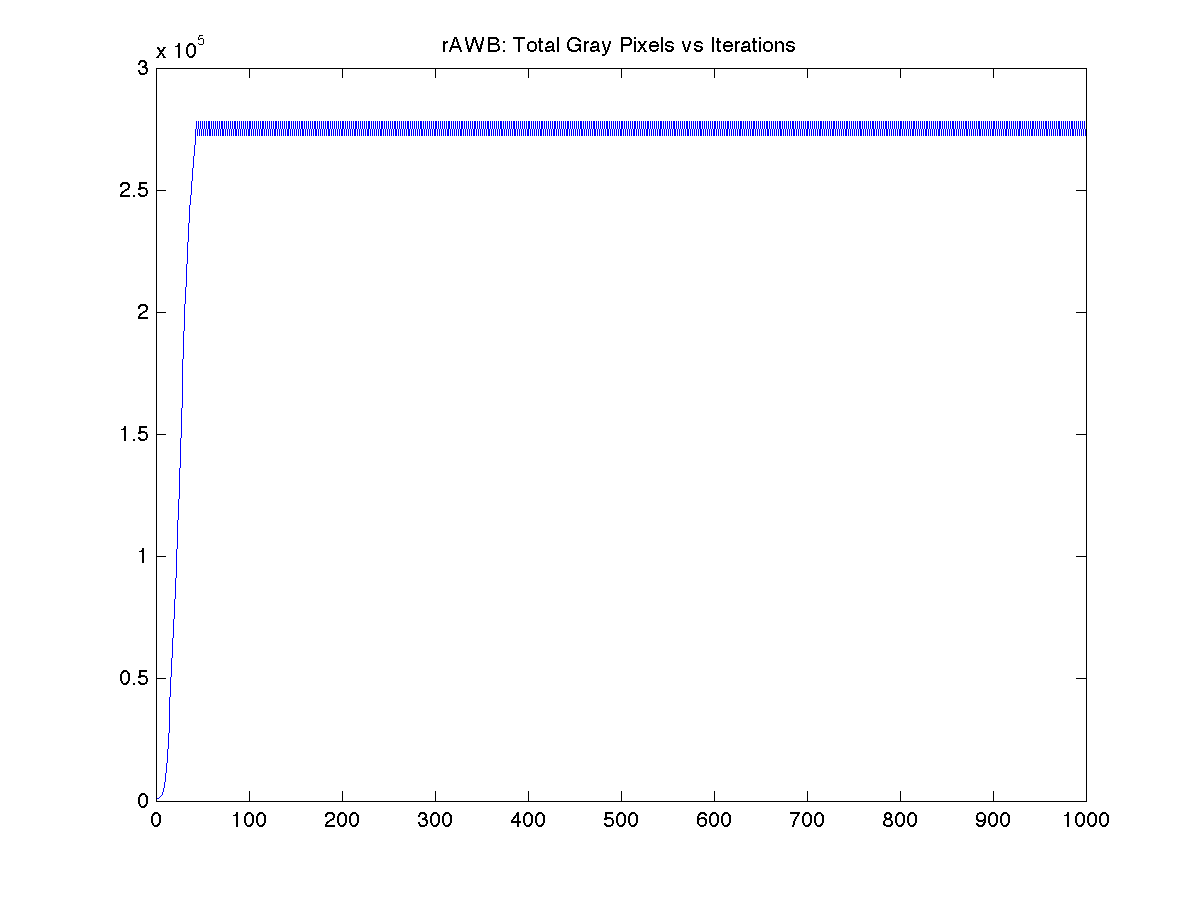

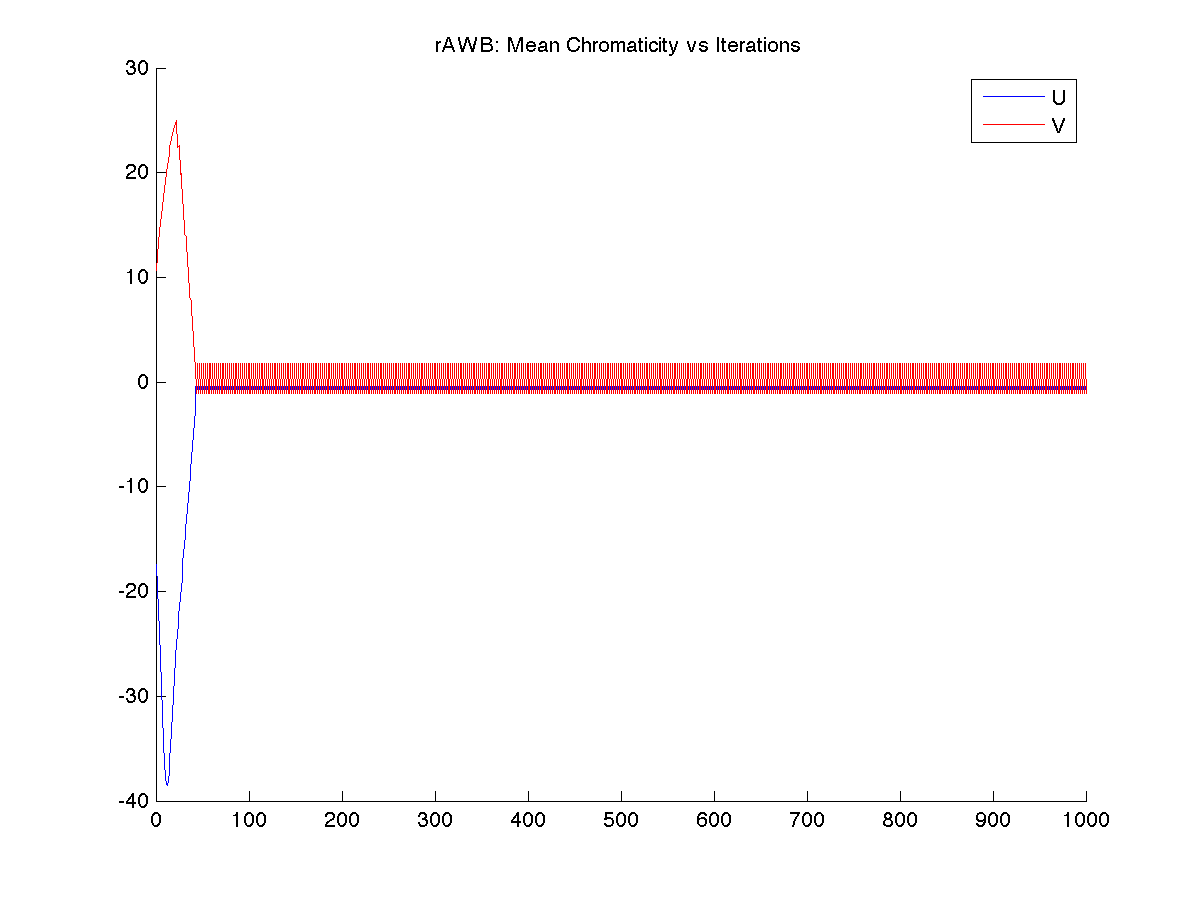

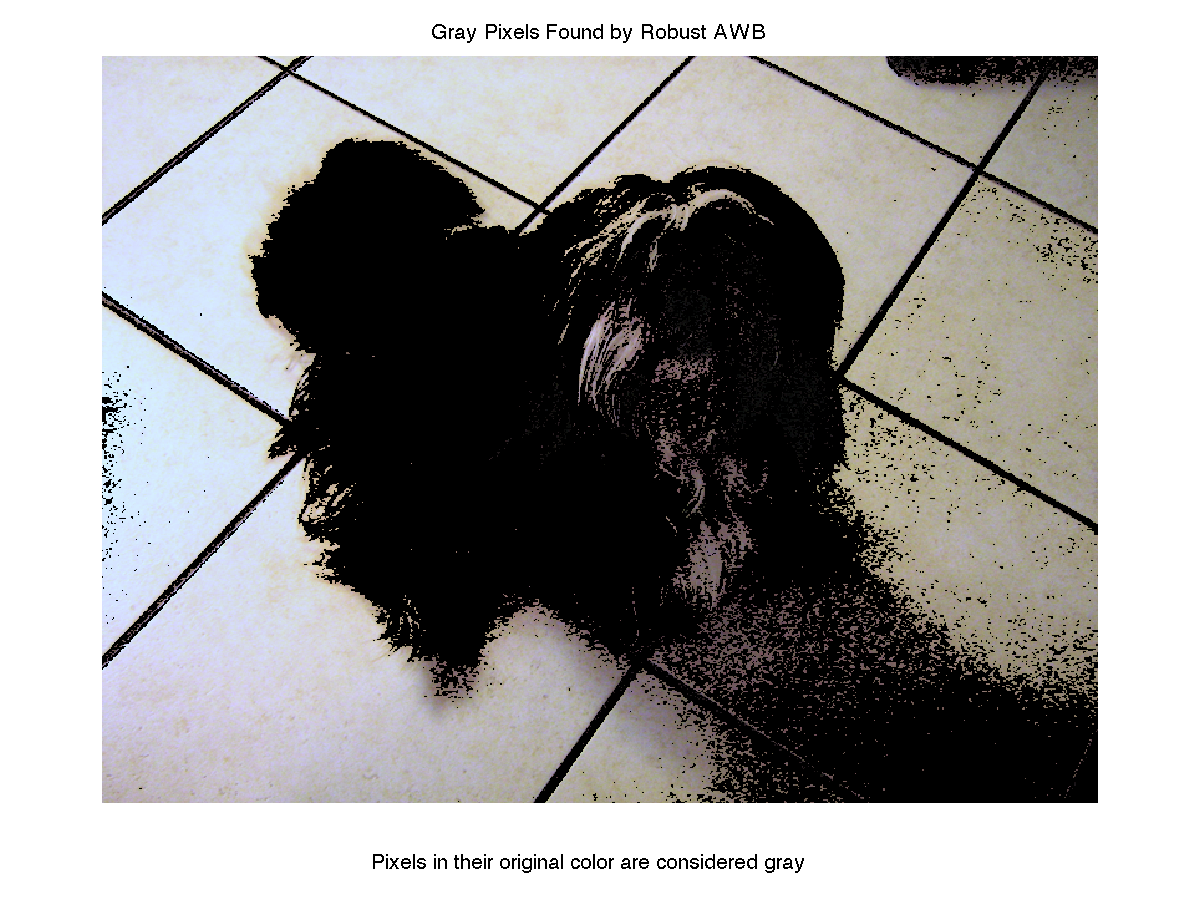

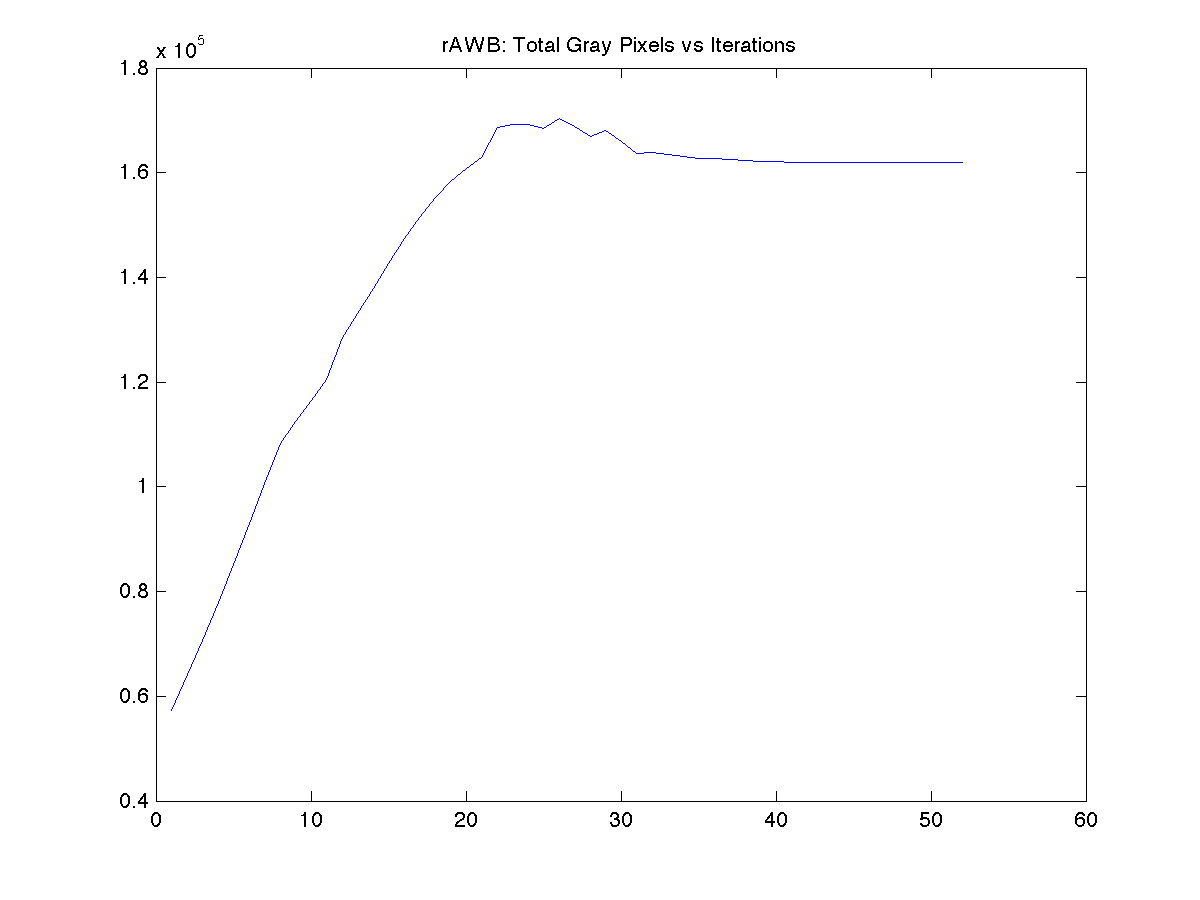

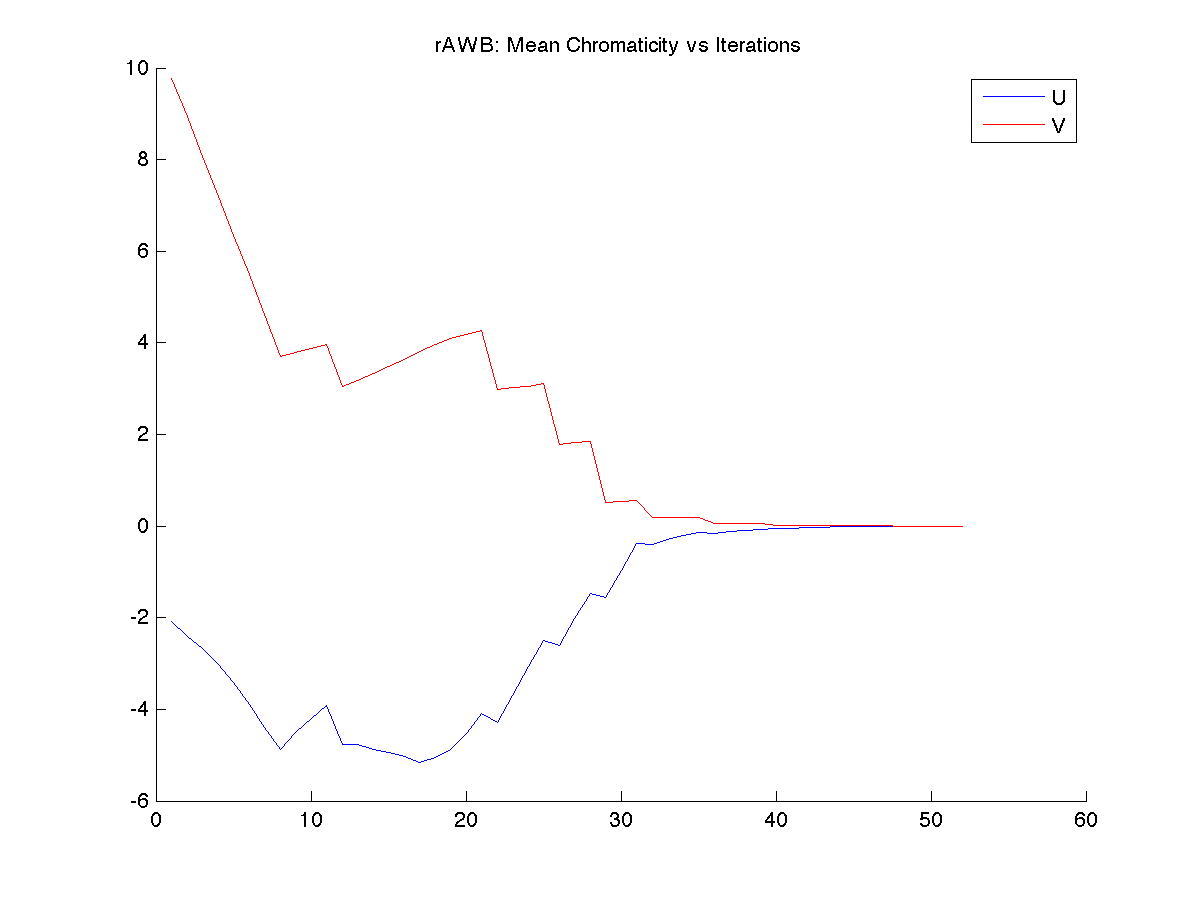

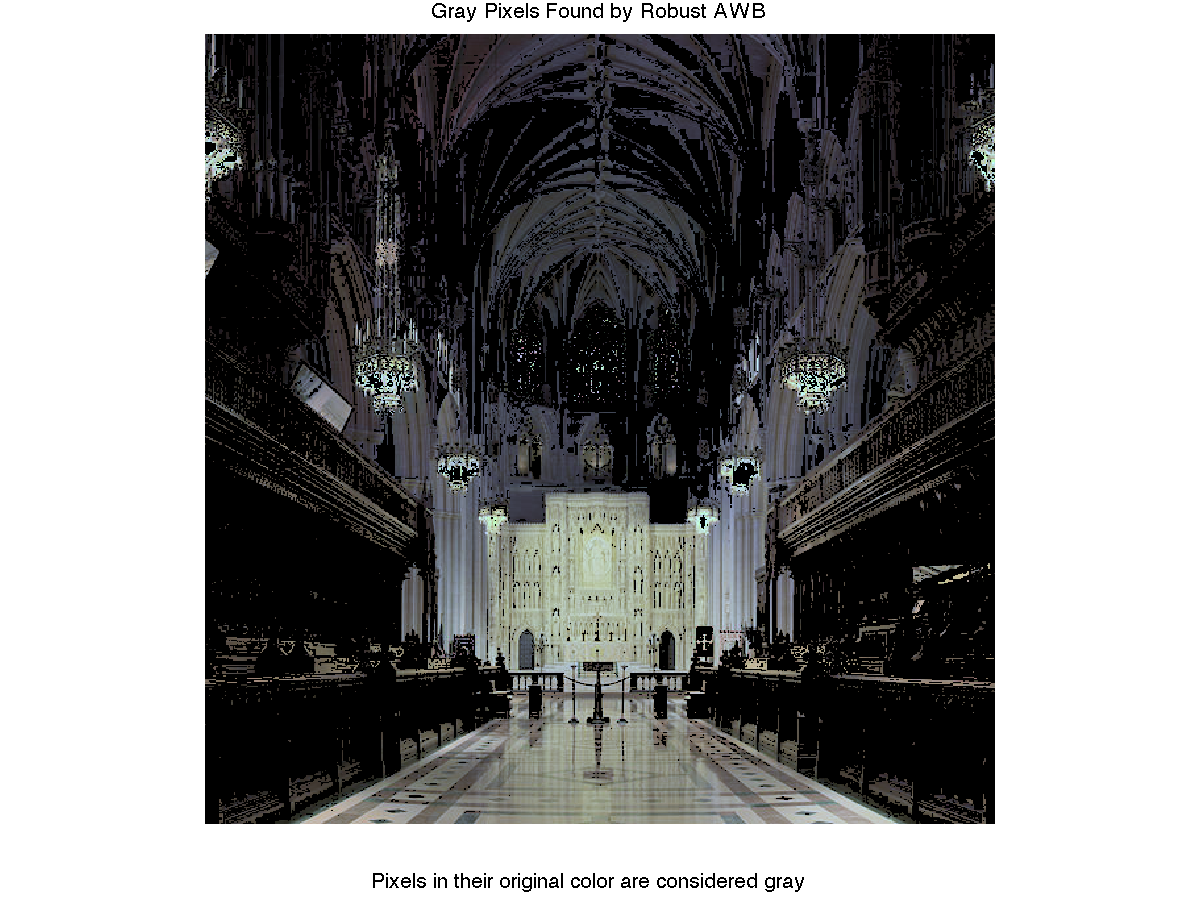

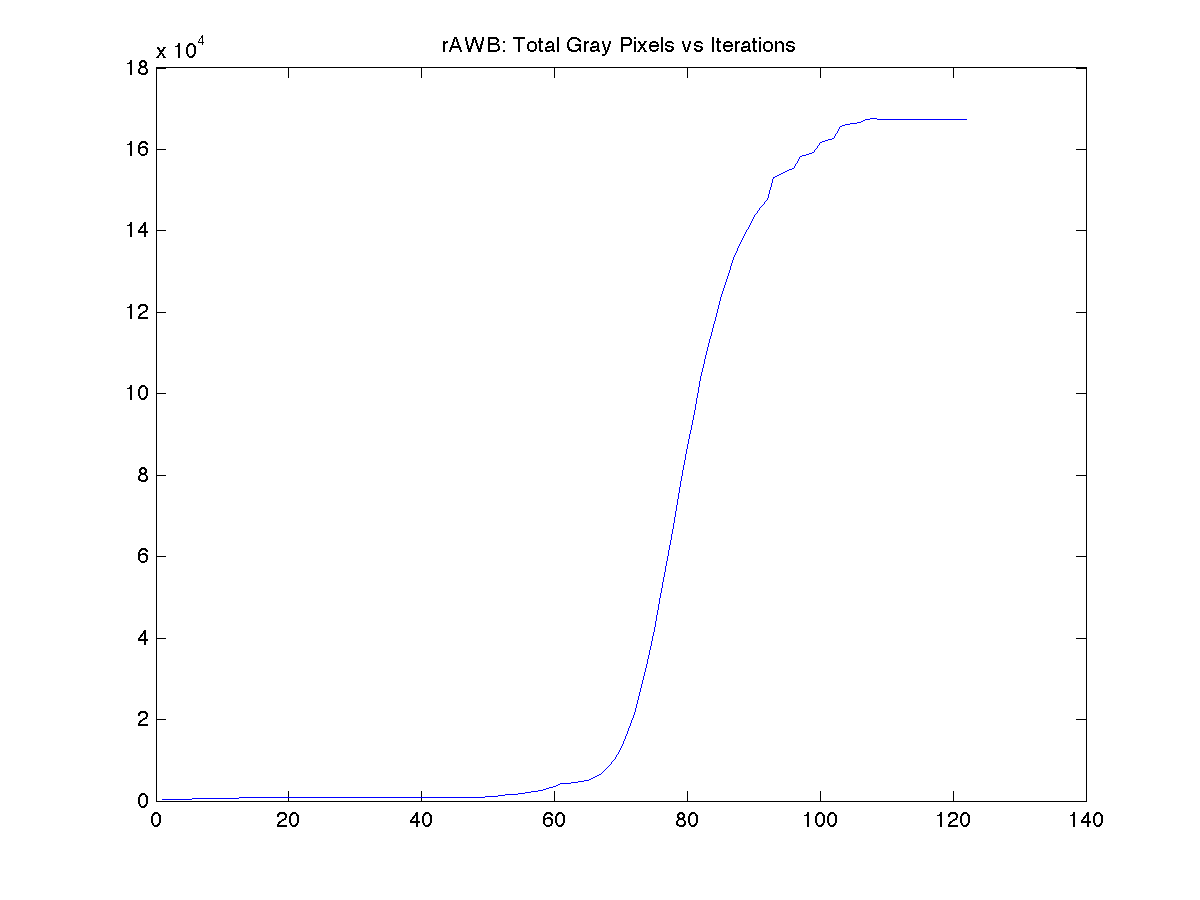

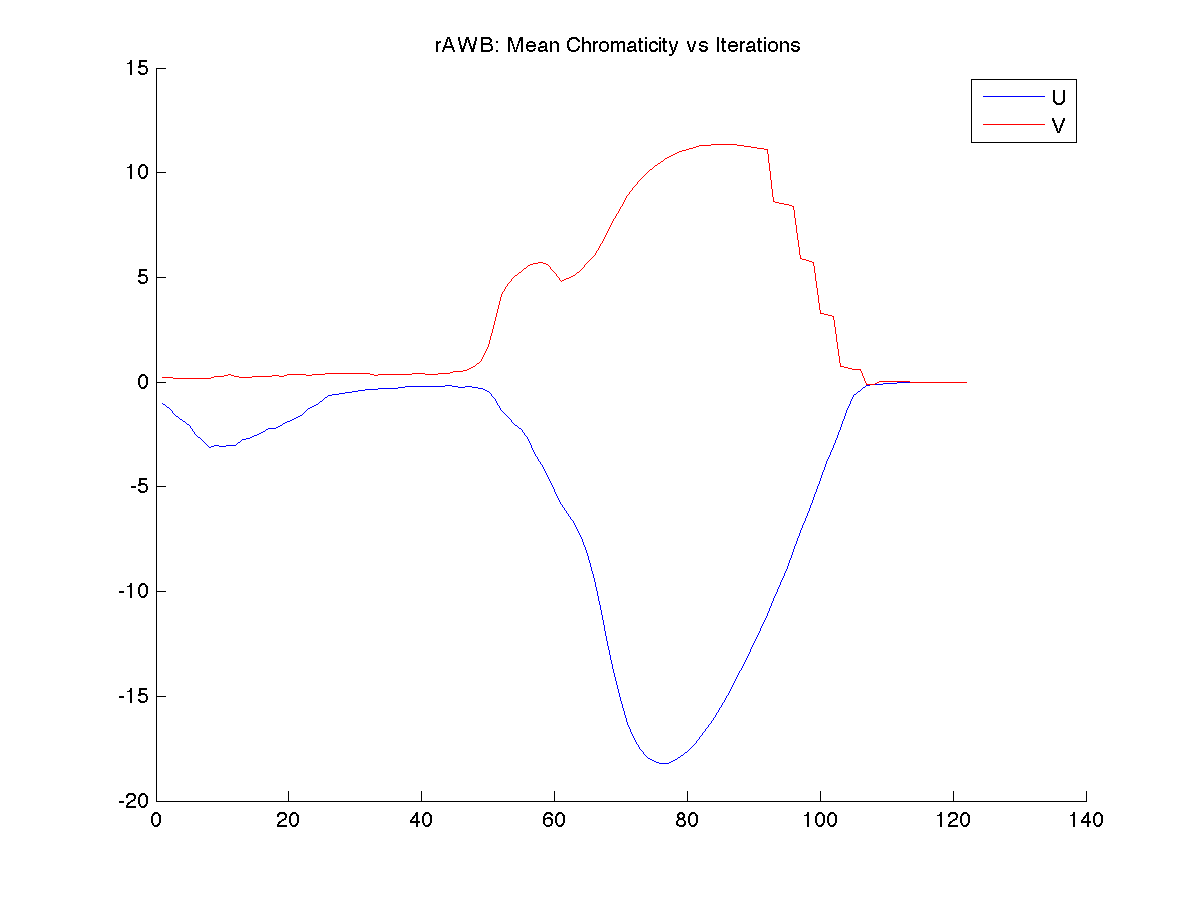

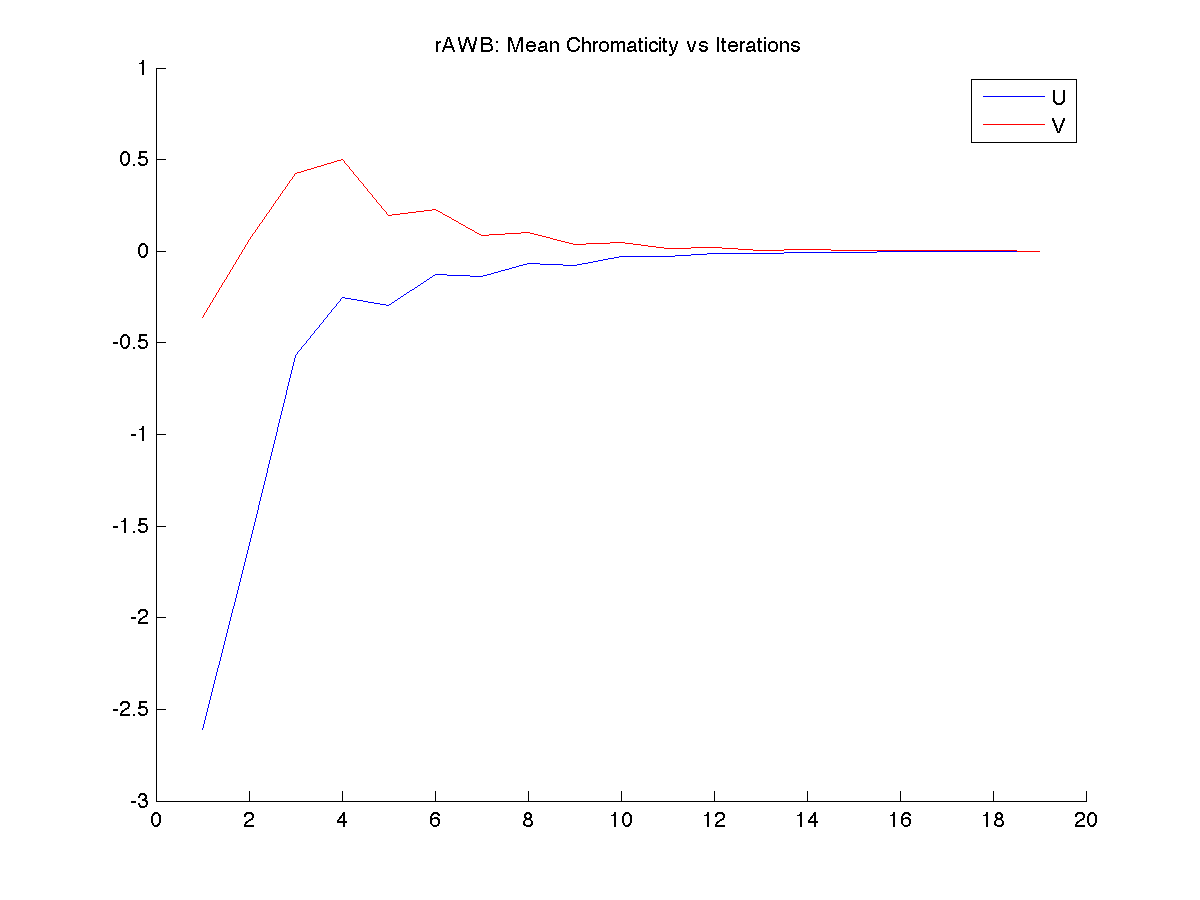

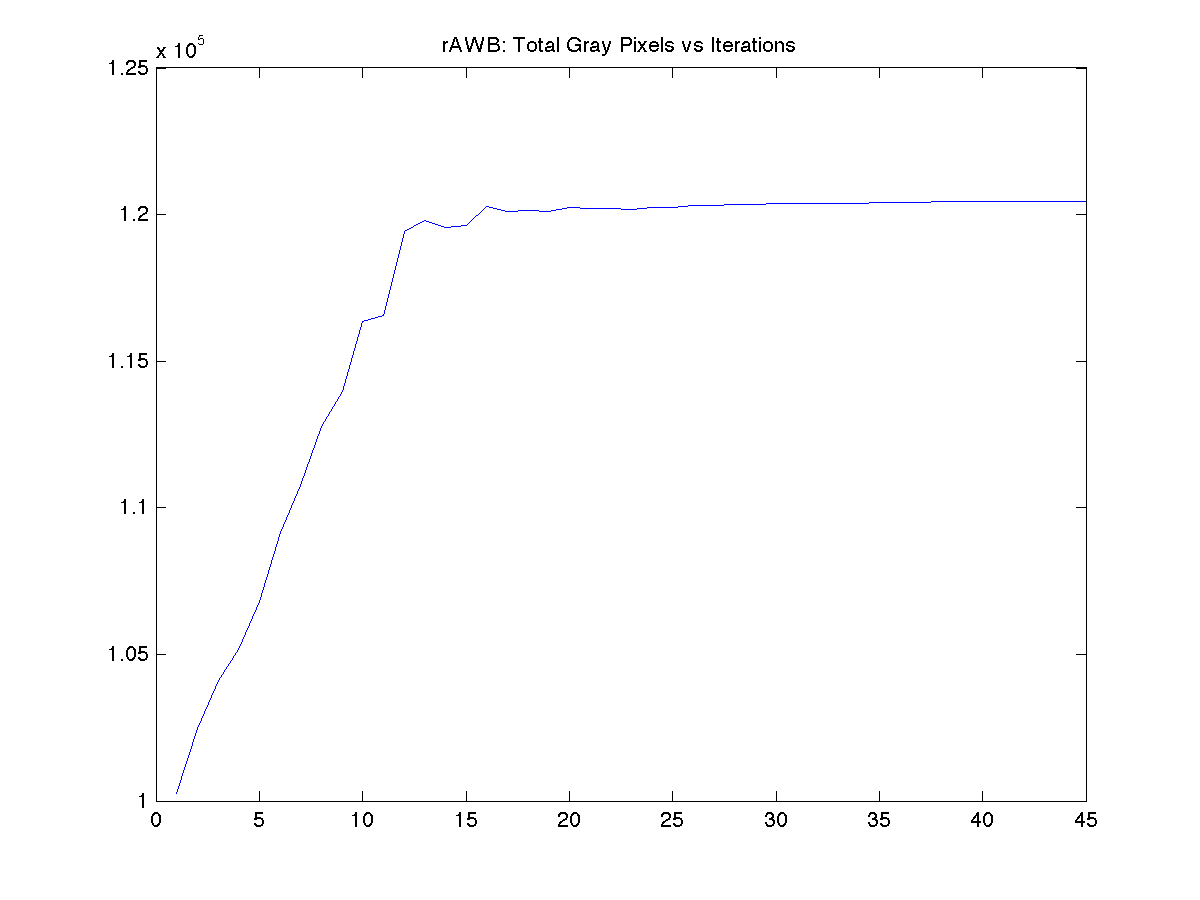

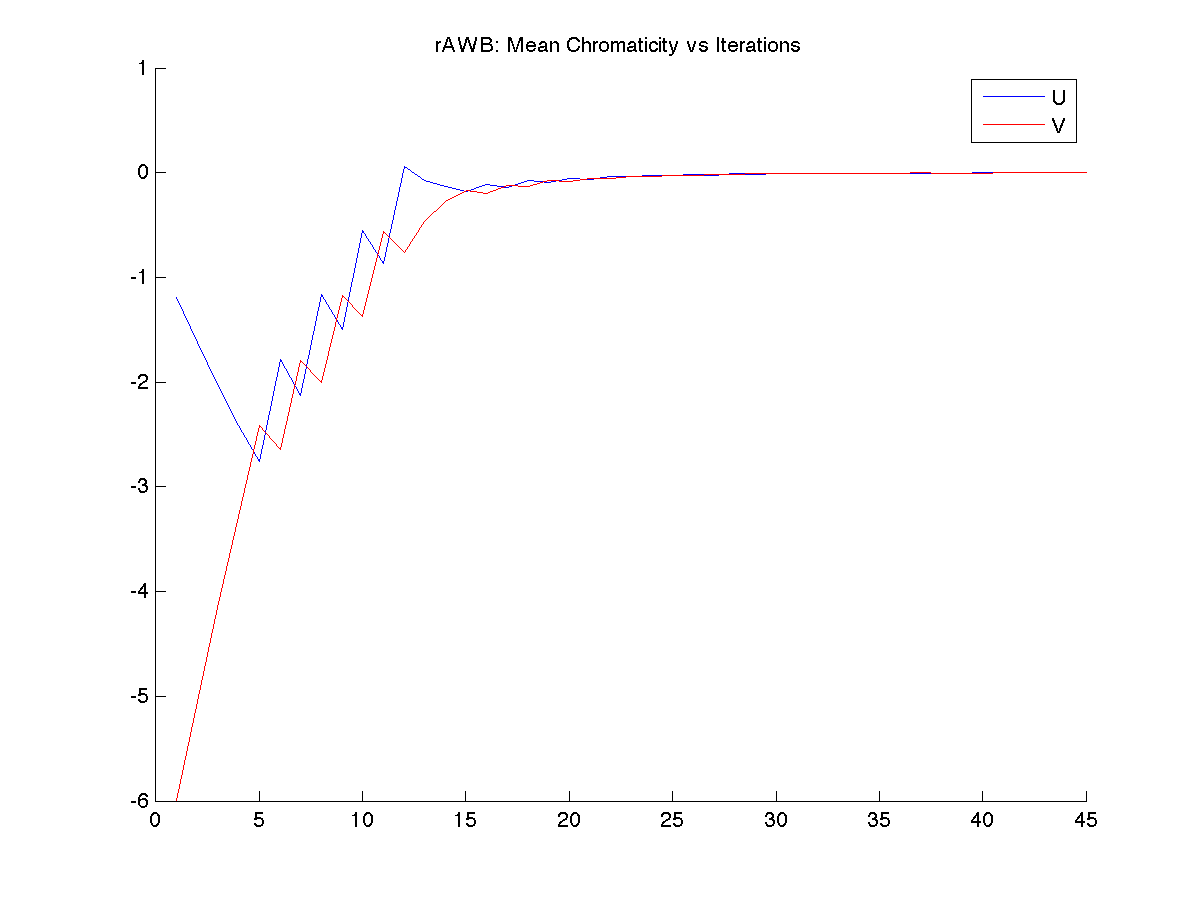

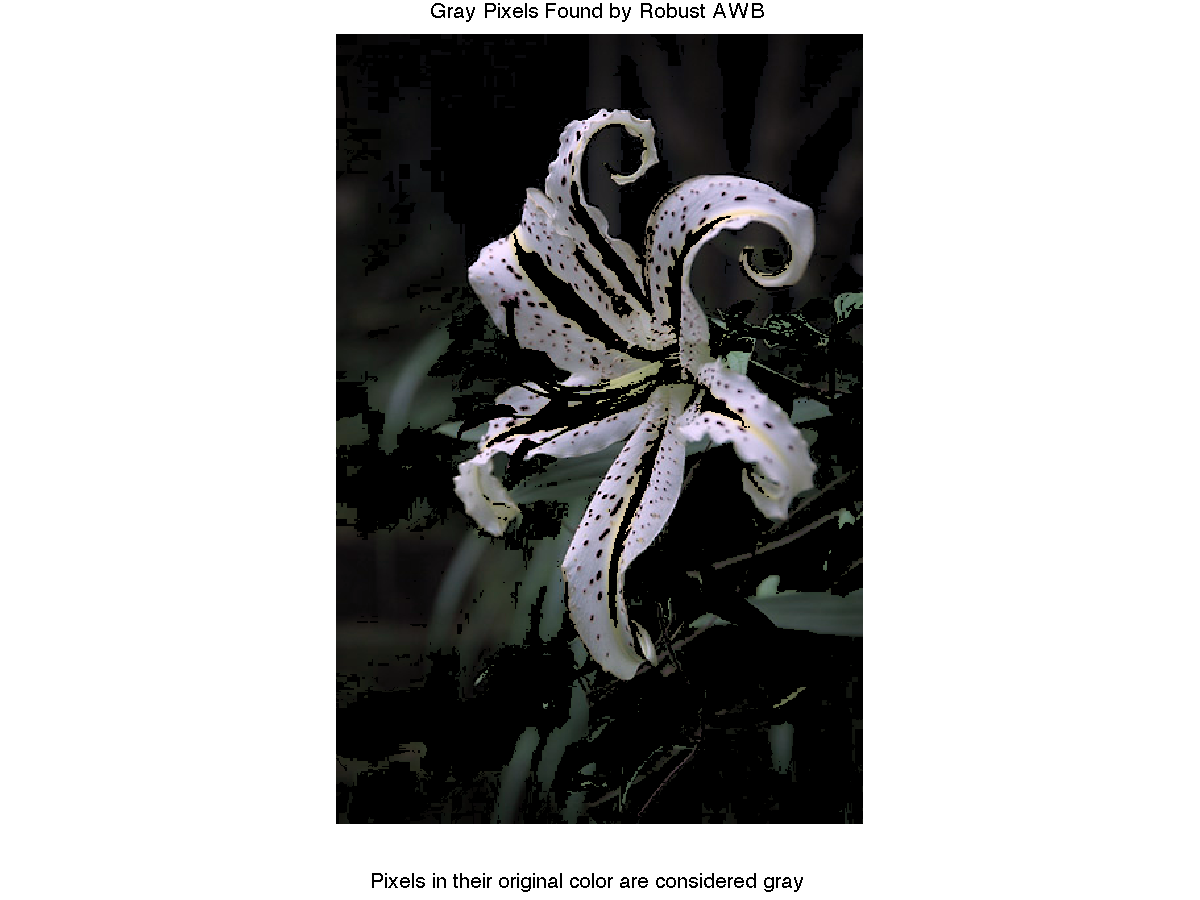

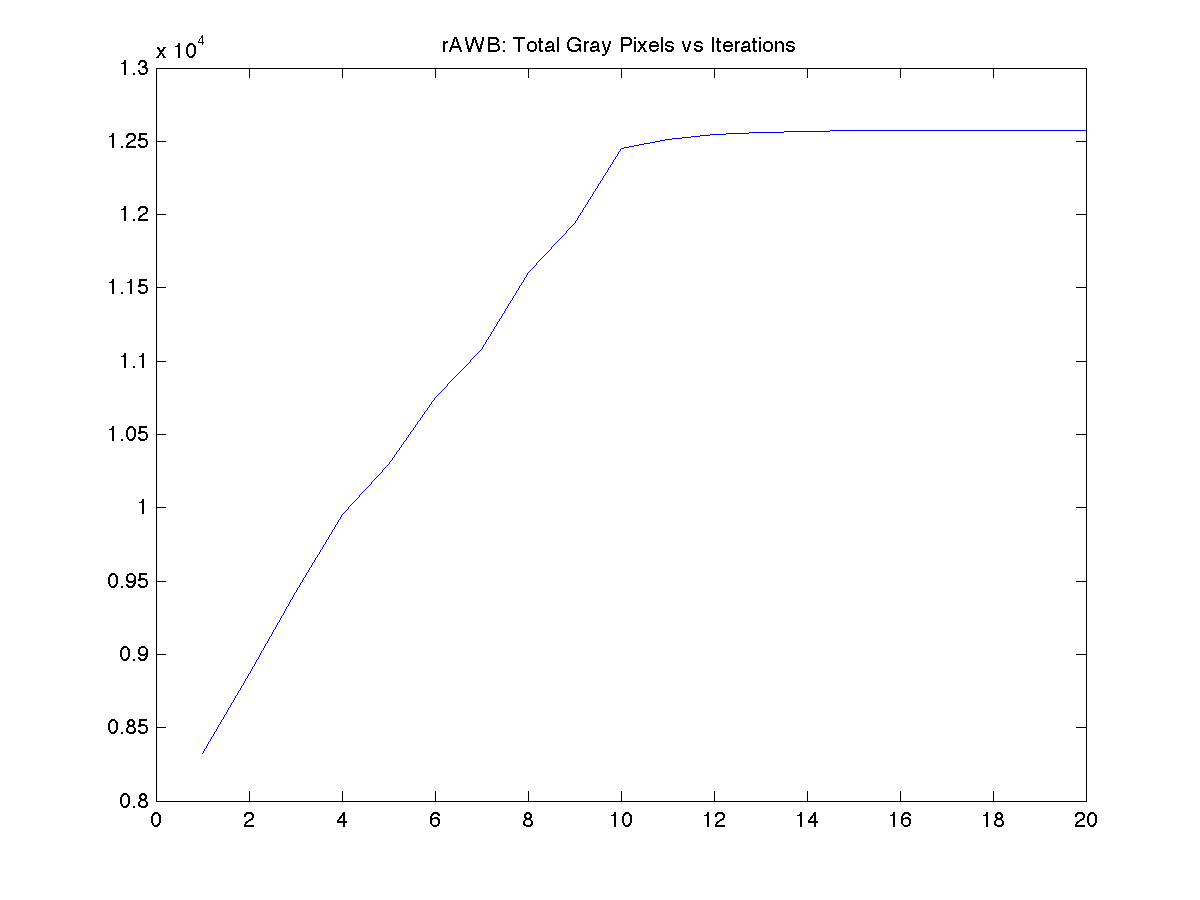

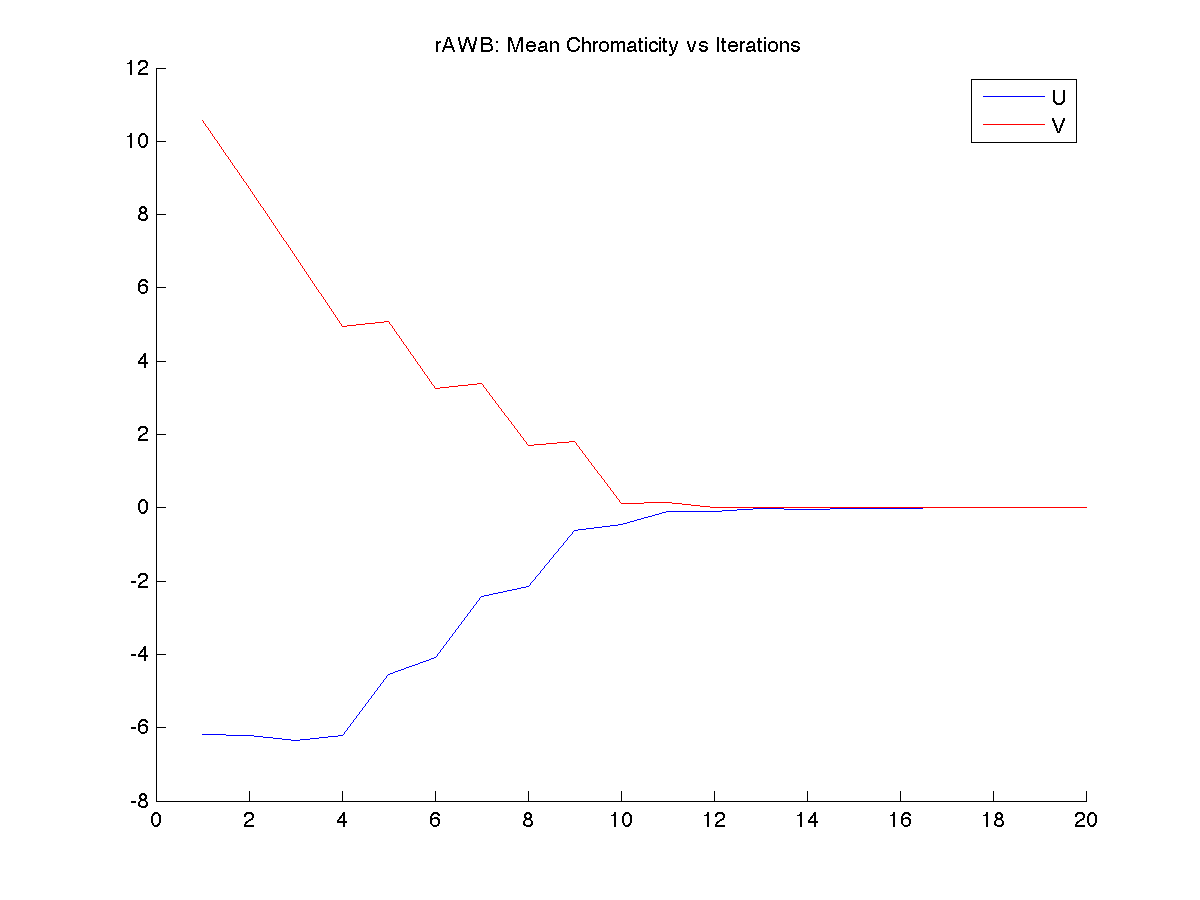

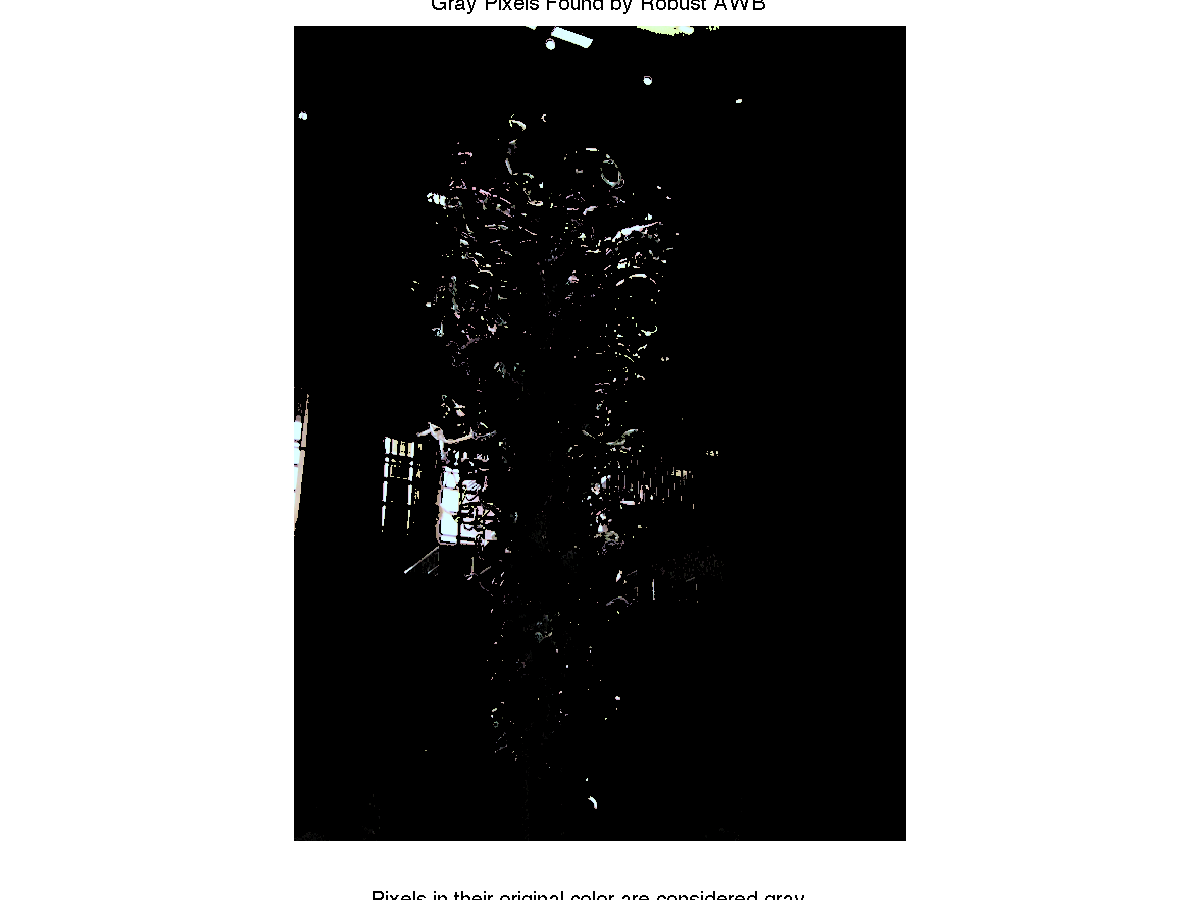

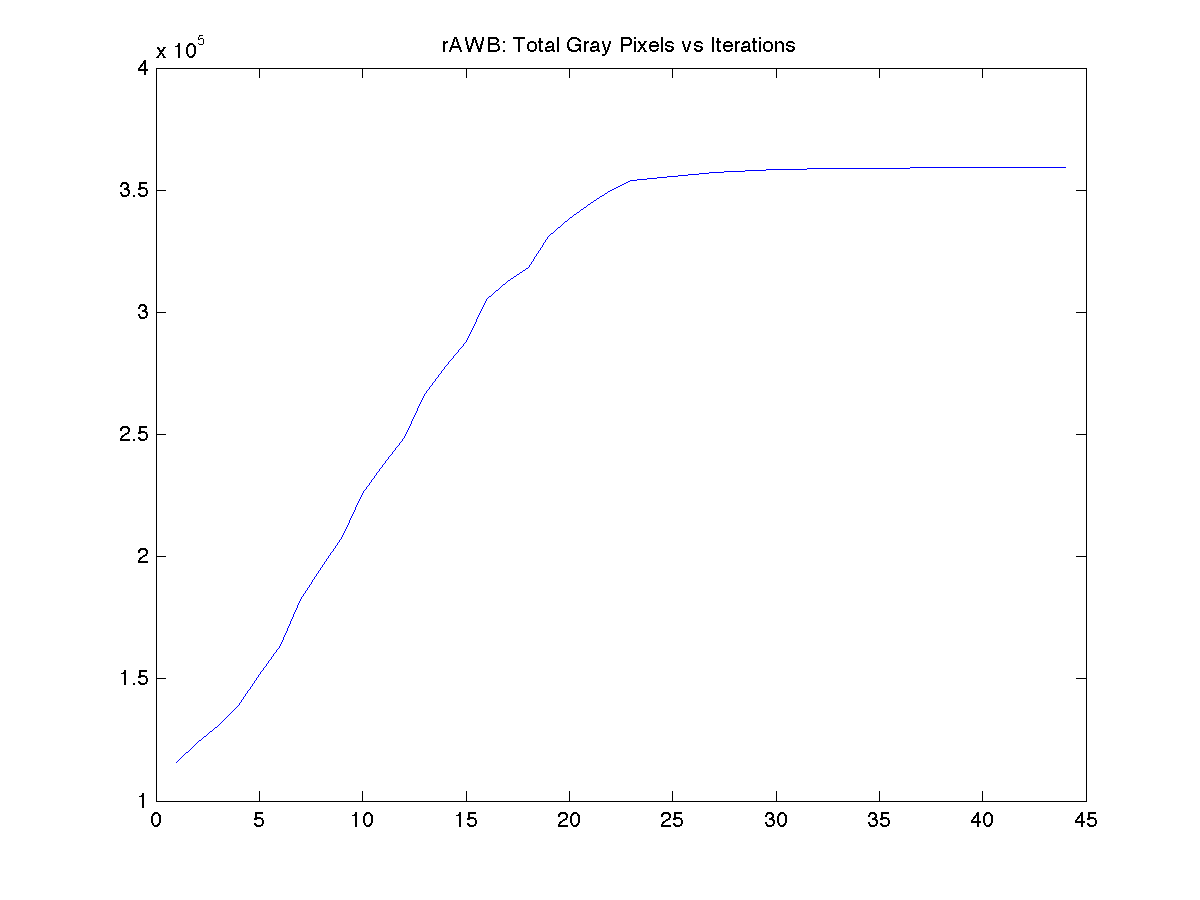

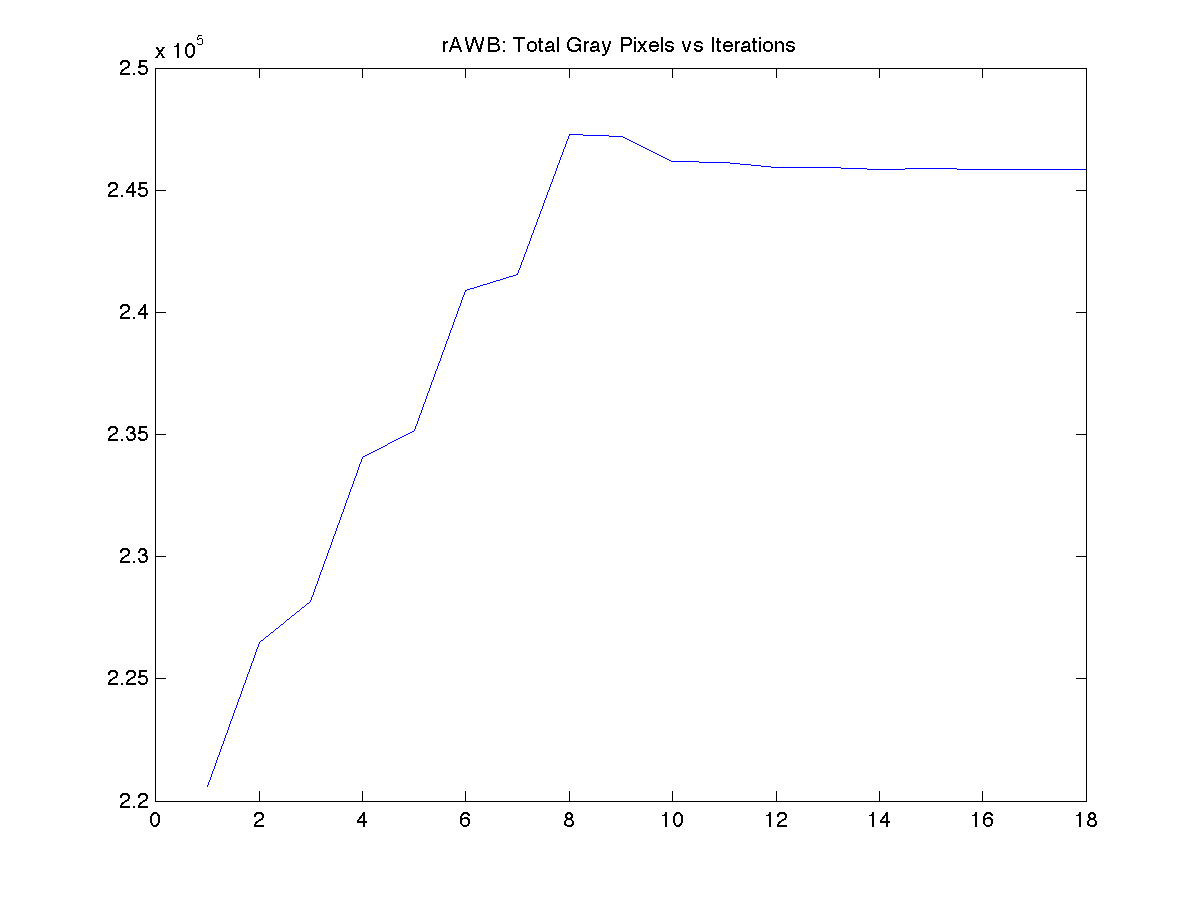

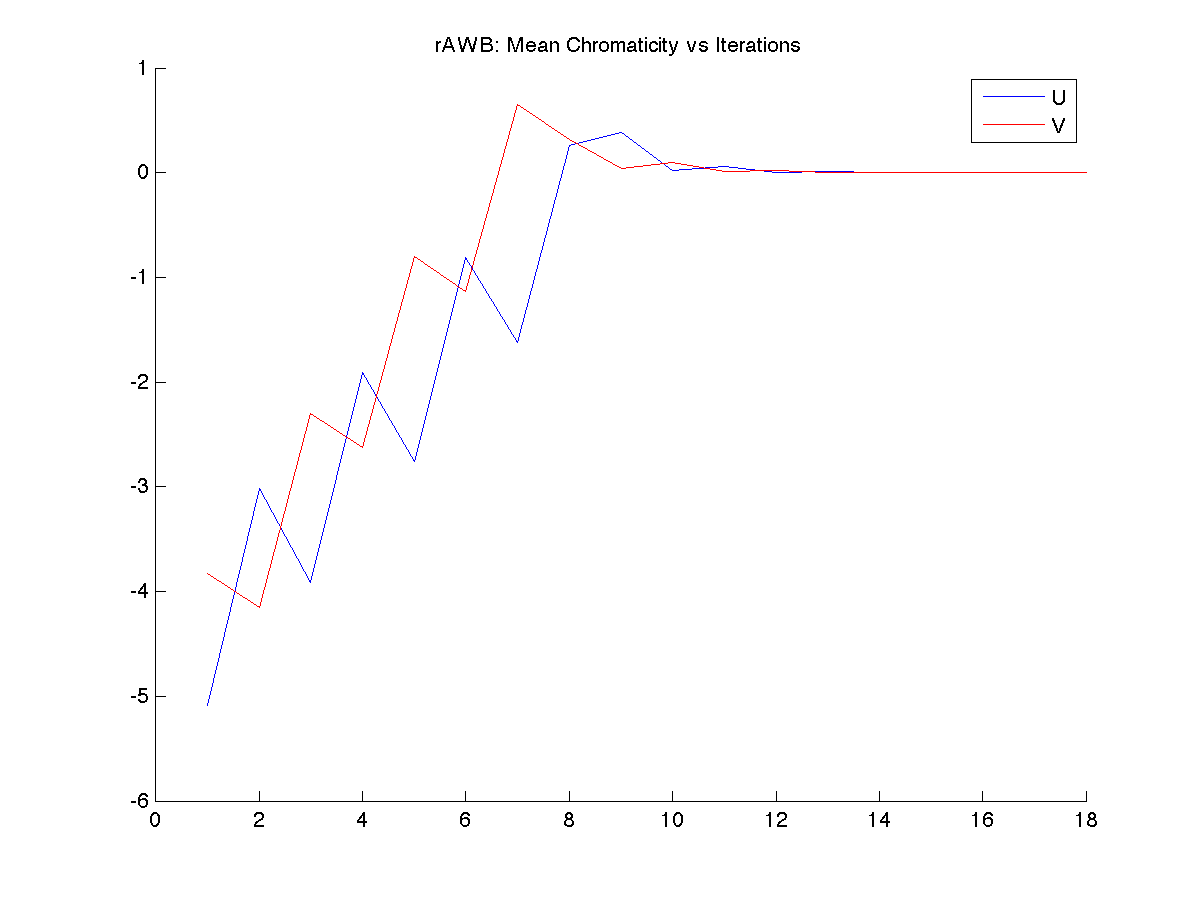

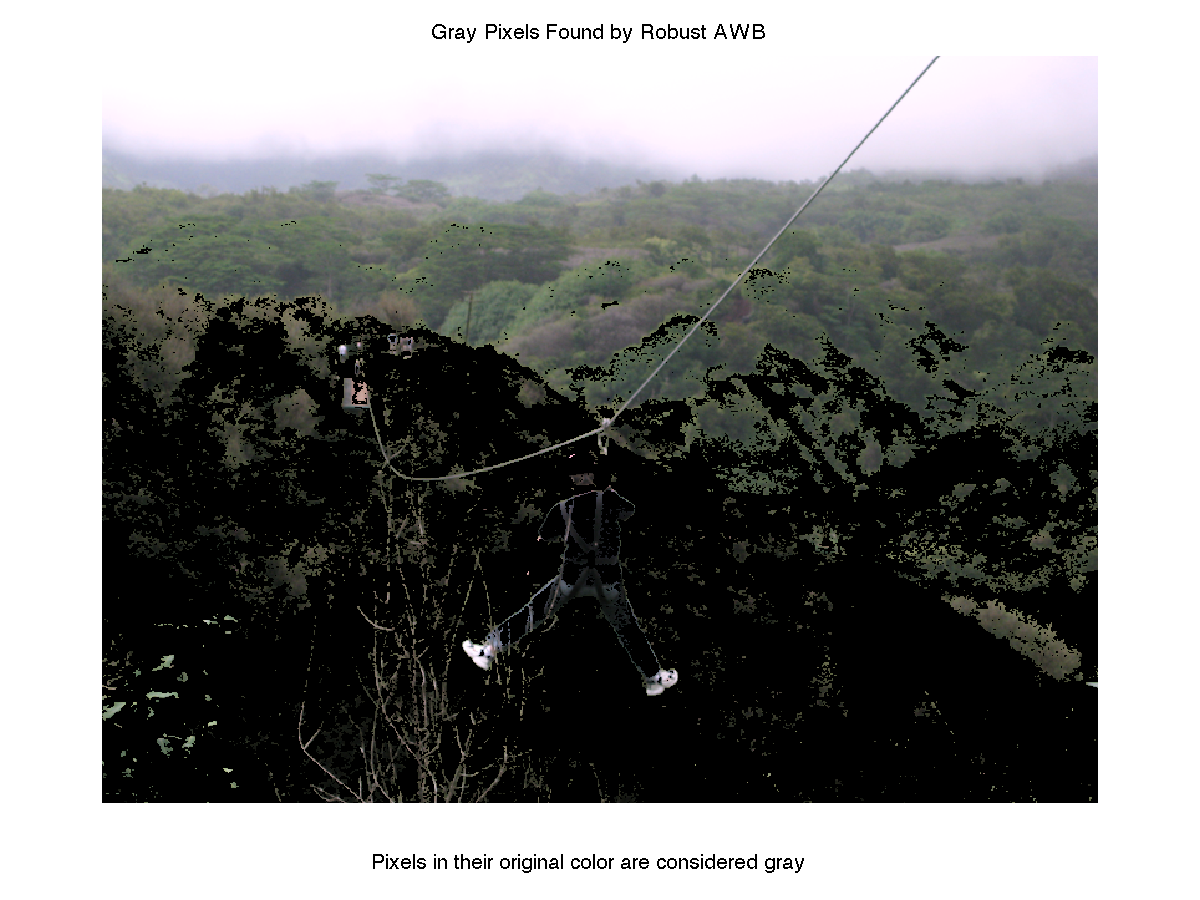

The technique presented by Huo et al. is essentially a more careful application of the gray world idea. Instead of averaging all the pixels in an image, the algorithm selects slightly off-gray candidate pixels based on their YUV coordinates. These pixels are more likely to reveal the scene illuminant than the objects' own colors. The threshold for what counts as acceptably off-gray is a tunable parameter; values around 0.3 are typical.
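
One way to write such a selection test is sketched below. Here $Y$ is the luma of a pixel, $U$ and $V$ are its chroma components, and $T$ is the threshold. The symbols and the exact form of the ratio are assumptions made for illustration, not taken from the text above. A pixel counts as an off-gray candidate when

$$\frac{|U| + |V|}{Y} < T, \qquad T \approx 0.3$$

A perfectly neutral pixel has $U = V = 0$, so the ratio measures how far a pixel's chroma strays from gray relative to its brightness.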

This method was designed with video cameras in mind, where one would like to achieve color balance within a few frames. It is meant to be implemented in hardware, where changing the gain of each channel's responsivity is easy. The algorithm uses a negative feedback loop to drive the off-grays toward neutral gray. Although it targets video, I have adapted it for photographs, at the price of a longer processing time than the other algorithms I implemented.
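
A minimal sketch of such a feedback loop on a still image follows. It is illustrative only and not the implementation used for the results here. It assumes a BT.601 RGB-to-YUV matrix, a candidate test of the form (|U| + |V|) / Y < threshold, and a fixed gain step. The function name `off_gray_balance` and the parameters `step`, `max_iters`, and `tol` are hypothetical.

```python
import numpy as np

# BT.601 RGB -> YUV matrix (assumption: the text does not say which YUV variant is used).
RGB_TO_YUV = np.array([
    [ 0.299,  0.587,  0.114],
    [-0.147, -0.289,  0.436],
    [ 0.615, -0.515, -0.100],
])

def off_gray_balance(img, threshold=0.3, step=0.01, max_iters=500, tol=1e-3):
    """Iteratively nudge the red/blue gains until off-gray pixels look neutral.

    img: float array of shape (H, W, 3), RGB values in [0, 1].
    Returns (balanced image, per-channel gains).
    """
    gains = np.ones(3)
    for _ in range(max_iters):
        balanced = np.clip(img * gains, 0.0, 1.0)
        yuv = balanced @ RGB_TO_YUV.T
        y, u, v = yuv[..., 0], yuv[..., 1], yuv[..., 2]

        # Off-gray candidates: chroma is small relative to luma.
        ratio = (np.abs(u) + np.abs(v)) / np.maximum(y, 1e-6)
        mask = (y > 1e-6) & (ratio < threshold)
        if not mask.any():
            break  # nothing near gray to learn from

        u_avg, v_avg = u[mask].mean(), v[mask].mean()
        if max(abs(u_avg), abs(v_avg)) < tol:
            break  # candidates are already neutral

        # Negative feedback: correct whichever chroma error is larger.
        if abs(u_avg) > abs(v_avg):
            gains[2] -= step * np.sign(u_avg)  # U mostly tracks blue
        else:
            gains[0] -= step * np.sign(v_avg)  # V mostly tracks red

    return np.clip(img * gains, 0.0, 1.0), gains
```

Because each iteration changes a gain by only a small fixed step, a photograph with a strong cast may need many passes over the full image, which is consistent with the longer processing time noted above. A larger `step` converges faster but oscillates more around the neutral point.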