Introduction

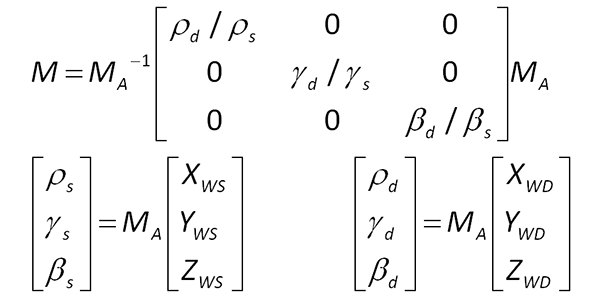

There are a variety of methods to achieve adaptation, but I looked at the most standard one, called the von Kries model or the chromatic adaptation transform. The underlying principle is that, to change the apparent illumination of a photo, we need to excite the same LMS cone responses in the eye as our desired illuminant would. Typically, we assume that this is possible with a diagonal scaling of the axes after a transformation from XYZ to a space that more closely resembles the LMS cone space. In other words, once we're in the right space, we simply divide out our estimated illuminant and multiply in our desired illuminant, separately for each channel. In matrix form, this is a diagonal scaling sandwiched between the transformation matrix, MA, and its inverse.
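Written out in a standard form, the transform reads as follows. The subscripts $S$ (estimated source illuminant) and $D$ (desired illuminant) and the cone-like coordinates $(\rho, \gamma, \beta)$ are assumed notation, not taken from the text:

$$
\begin{bmatrix} X_D \\ Y_D \\ Z_D \end{bmatrix}
= M_A^{-1}
\begin{bmatrix}
\rho_D / \rho_S & 0 & 0 \\
0 & \gamma_D / \gamma_S & 0 \\
0 & 0 & \beta_D / \beta_S
\end{bmatrix}
M_A
\begin{bmatrix} X_S \\ Y_S \\ Z_S \end{bmatrix},
\qquad
\begin{bmatrix} \rho \\ \gamma \\ \beta \end{bmatrix}
= M_A \begin{bmatrix} X_W \\ Y_W \\ Z_W \end{bmatrix}
$$

Here $(X_W, Y_W, Z_W)$ is the white point of the corresponding illuminant, so the diagonal entries are the per-channel ratios of the desired white to the estimated white after both have been mapped through $M_A$.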

The choice of the transformation matrix, MA, is the subject of research [1]. It can be optimized against a variety of criteria, such as mean ΔEab or statistical distribution testing [5]. In any case, the goal here is to implement these different transformations and to gain some intuition about their performance.

Our desired lighting is the D65 CIE standard illuminant, which approximates the spectrum of daylight at midday. This is the white point of the sRGB color space, which most monitors are calibrated for.

The available transforms are:

- von Kries

- Bradford

- Sharp - based on sharpened sensors, minimizes XYZ errors

- CMCCAT2000 - fitted from all available color data sets

- CAT02 - optimized for minimizing CIELAB differences

- XYZ
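To make the mechanics concrete, here is a minimal sketch of the adaptation step in plain Python. It is not the implementation used for this project: the function names (`adapt`, `mat_vec`, `inverse_3x3`) are hypothetical, the Bradford matrix and the white points are the commonly published values rather than numbers taken from this text, and illuminant A merely stands in for an estimated illuminant. Only two of the listed transforms are shown; the others would differ only in the matrix passed as `m_a`.

```python
# Sketch of a von Kries-style chromatic adaptation (assumed names and values).

# Commonly published Bradford matrix (assumption: not taken from this text).
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

# "XYZ" transform: scale directly in XYZ, i.e. the identity matrix.
XYZ_SCALING = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

# White points for the 2-degree observer, normalized to Y = 1.
D65_WHITE = (0.95047, 1.0, 1.08883)
ILLUMINANT_A_WHITE = (1.09850, 1.0, 0.35585)  # example "estimated" illuminant


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a length-3 vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def inverse_3x3(m):
    """Invert a 3x3 matrix via the adjugate divided by the determinant."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def adapt(xyz, source_white, target_white=D65_WHITE, m_a=BRADFORD):
    """Adapt one XYZ color from source_white to target_white."""
    src = mat_vec(m_a, source_white)   # estimated illuminant in cone-like space
    dst = mat_vec(m_a, target_white)   # desired illuminant in cone-like space
    cone = mat_vec(m_a, xyz)           # the color itself in cone-like space
    # Divide out the estimated illuminant, apply the desired one, per channel.
    scaled = [cone[k] * dst[k] / src[k] for k in range(3)]
    return mat_vec(inverse_3x3(m_a), scaled)


if __name__ == "__main__":
    # The source white itself should land on the D65 white point.
    print(adapt(ILLUMINANT_A_WHITE, ILLUMINANT_A_WHITE))
    # Same color, adapted by scaling directly in XYZ instead.
    print(adapt([0.4, 0.3, 0.1], ILLUMINANT_A_WHITE, m_a=XYZ_SCALING))
```

A quick sanity check for any choice of `m_a` is that the estimated white maps onto the desired white, and that adapting from D65 to D65 leaves colors unchanged. In a real pipeline the same 3x3 product would be precomputed once and applied to every pixel rather than rebuilt per color.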