Introduction

1. What are Mosaic Images?

A color image requires at least three color samples at each pixel location. In principle, therefore, a digital camera would need three separate sensors to measure an image completely. In practice, using three sensors is difficult. First, using multiple sensors to detect different parts of the visible spectrum requires splitting the light entering the camera so that the scene is imaged onto each sensor. Second, precise registration is needed to align the three resulting images [3].

To simplify image capture, many cameras use a single sensor array covered by a color filter array. On their own, these sensor elements detect only the intensity of incoming light, not its frequency. Each filter in the color filter array, however, passes only part of the spectrum (commonly red, green or blue), so each sensor element records the intensity of just one color. The resulting image is a mosaic image whose pattern is determined by the color filter array.
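The sampling step can be simulated in a few lines. The Python sketch below is an illustration under stated assumptions, not a model of any particular camera: an RGB image is a list of rows of `(r, g, b)` tuples, the color filter array is a small tile of filter letters (the hypothetical `TILE`) repeated across the sensor, and `mosaic` is an illustrative function name. At each pixel, only the channel selected by the filter above it is kept.

```python
# Illustrative sketch: simulate a single-sensor camera by keeping, at each
# pixel, only the color channel selected by a repeating color filter tile.

RGB = {"R": 0, "G": 1, "B": 2}  # channel index for each filter color


def mosaic(image, tile):
    """Sample an RGB image (list of rows of (r, g, b) tuples) through a CFA.

    `tile` is a small 2-D list of filter letters ("R", "G", "B") that repeats
    across the sensor. The result holds one intensity value per pixel.
    """
    th, tw = len(tile), len(tile[0])
    return [
        [pixel[RGB[tile[y % th][x % tw]]] for x, pixel in enumerate(row)]
        for y, row in enumerate(image)
    ]


# Hypothetical 2x2 tile; this particular one is the RGGB Bayer arrangement.
TILE = [["R", "G"],
        ["G", "B"]]

image = [[(10, 20, 30), (11, 21, 31)],
         [(12, 22, 32), (13, 23, 33)]]
print(mosaic(image, TILE))  # [[10, 21], [22, 33]]
```

Two of the three values at every pixel are discarded, which is exactly the information that demosaicking later has to estimate.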

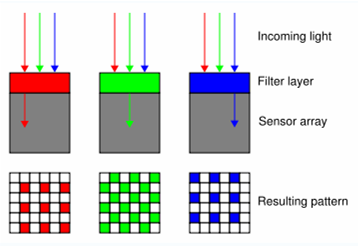

In Figure 1, incoming light is filtered by the color filters placed on top of the sensors, so only one color reaches each sensor element. The result is a mosaic image.

Figure 1. Diagram showing the formation of a mosaic image

[image from Wikipedia]

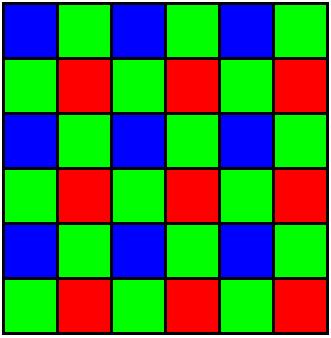

There are several color filter array patterns. The most common is the Bayer pattern [4], shown in Figure 2 below.

Figure 2. Bayer Pattern

In the Bayer pattern, green samples are arranged in a checkerboard pattern, while red and blue samples are each arranged on a rectangular grid. The density of green samples is twice that of red or blue samples. Green is sampled more densely because the human visual system is more sensitive to luminance than to chrominance. Luminance carries most of the important spatial information, and we would like to preserve as much spatial detail as possible. The green channel can be treated as the luminance record because the spectral response of a green filter resembles the spectral response of the eye’s luminance channel. Therefore, having more green samples preserves more luminance information.
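The 2:1 ratio of green to red or blue samples can be checked directly. This sketch assumes the RGGB ordering of the 2x2 Bayer tile; the helper names `bayer_layout` and `sample_fractions` are illustrative. Other orderings of the tile shift the same pattern and give the same proportions.

```python
from collections import Counter

# Assumed RGGB ordering of the 2x2 Bayer tile.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]


def bayer_layout(height, width, tile=BAYER_TILE):
    """Return the filter color at every pixel of a height x width sensor."""
    return [[tile[y % 2][x % 2] for x in range(width)] for y in range(height)]


def sample_fractions(layout):
    """Fraction of sensor elements covered by each filter color."""
    counts = Counter(c for row in layout for c in row)
    total = sum(counts.values())
    return {c: counts[c] / total for c in "RGB"}


layout = bayer_layout(4, 4)
for row in layout:
    print(" ".join(row))           # green forms a checkerboard
print(sample_fractions(layout))    # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

Half of all sites are green, and in this ordering they sit exactly where the row and column indices sum to an odd number, which is the checkerboard described above.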

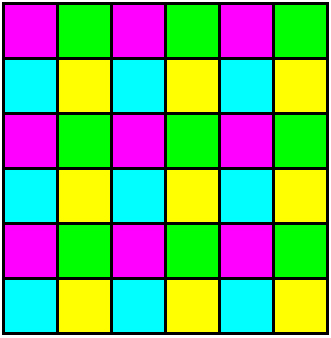

Another filter array pattern is the CYGM pattern, which uses equal numbers of four color filters: cyan, yellow, green and magenta. The CYGM filter array pattern is shown in Figure 3.

Figure 3. CYGM filter array

Figure 4 shows an example of a Bayer pattern mosaic image.

Figure 4. Example of a Bayer Pattern Mosaic Image

2. Demosaicking

A full color image has three color values per pixel; for example, each pixel in an RGB image has an R, a G and a B value. In a mosaic image, however, each pixel has only one color value. To create the complete color image, the two missing color values at each pixel must be estimated from the available mosaic data. This process of interpolating the color values of a mosaic image to create the complete color image is called demosaicking.
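As a concrete example of estimating missing values, the sketch below fills in only the green channel by bilinear interpolation, one of the simplest non-adaptive approaches. It assumes an RGGB Bayer layout, a mosaic stored as a list of rows with at least 2x2 pixels, and the illustrative function name `interpolate_green`. In this layout, the in-bounds neighbors directly above, below, left and right of every red or blue site are green, so the missing green value is taken as their average. Red and blue would be interpolated in a similar way, which is not shown.

```python
# Illustrative sketch: bilinear interpolation of the green channel on an
# assumed RGGB Bayer mosaic (list of rows, at least 2x2 pixels).


def is_green(y, x):
    # In the RGGB tile, green sits where row + column is odd.
    return (x + y) % 2 == 1


def interpolate_green(mosaic):
    h, w = len(mosaic), len(mosaic[0])
    green = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if is_green(y, x):
                green[y][x] = float(mosaic[y][x])  # measured value, kept
                continue
            # Red or blue site: average the in-bounds 4-neighbors, which are
            # all green sites in a Bayer layout.
            neighbors = [mosaic[ny][nx]
                         for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                         if 0 <= ny < h and 0 <= nx < w]
            green[y][x] = sum(neighbors) / len(neighbors)
    return green


# Red/blue samples (100s, 200) are ignored; green samples are 10..40.
m = [[100, 10, 101],
     [20, 200, 30],
     [102, 40, 103]]
print(interpolate_green(m))
# [[15.0, 10.0, 20.0], [20.0, 25.0, 30.0], [30.0, 40.0, 35.0]]
```

Averaging blindly across an edge blurs it, which is one reason adaptive methods choose the interpolation direction from local image structure.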

The performance of a camera's demosaicking algorithm is key to the visual quality of its images. Many different interpolation techniques have been proposed to estimate the two missing color values at each pixel. Gunturk et al. [3] categorized demosaicking algorithms into three groups:

1. Heuristic approaches.

These algorithms are mostly filtering operations, which may be adaptive or non-adaptive and may exploit correlations between color channels. They do not try to solve a mathematically defined optimization problem.

2. Algorithms that perform demosaicking using a reconstruction approach.

These algorithms make assumptions about inter-channel correlation or about a prior model of the image, and solve a mathematical problem based on those assumptions.

3. Generalization that uses the spectral filtering model in restoration.

This group formulates demosaicking as an inverse problem. These algorithms model the image formation process, accounting for the transformations performed by the color filters, lens distortions, sensor noise, etc. They then determine the most likely output image given the measured color filter array (CFA) image.
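Groups 2 and 3 both cast demosaicking as an optimization problem. The toy sketch below is not any specific published algorithm: it reconstructs a 1-D row of one color channel from its sampled positions by minimizing a data-fidelity term plus a smoothness penalty, J(x) = Σ m_i (x_i − y_i)² + λ Σ (x_{i+1} − x_i)², using plain gradient descent. The sampling mask `m`, the weight `lam`, the first-difference prior, and the function names `gradient` and `reconstruct` are all illustrative assumptions; a real inverse-problem method would also model the optics, the filter responses and sensor noise.

```python
# Toy sketch: recover a 1-D signal x from samples y that are observed only
# where mask[i] == 1, by minimizing
#   J(x) = sum_i mask[i] * (x[i] - y[i])**2 + lam * sum_i (x[i+1] - x[i])**2
# (data fidelity plus an assumed smoothness prior).


def gradient(x, y, mask, lam):
    """Gradient of J with respect to x."""
    n = len(x)
    g = [2.0 * mask[i] * (x[i] - y[i]) for i in range(n)]
    for i in range(n - 1):
        d = x[i + 1] - x[i]
        g[i] -= 2.0 * lam * d      # derivative of lam * d**2 w.r.t. x[i]
        g[i + 1] += 2.0 * lam * d  # derivative of lam * d**2 w.r.t. x[i+1]
    return g


def reconstruct(y, mask, lam=0.01, iters=2000):
    # The Hessian's largest eigenvalue is at most 2 + 8*lam, so this step
    # size is small enough for gradient descent to converge.
    step = 1.0 / (2.0 + 8.0 * lam)
    x = [float(v) if m else 0.0 for v, m in zip(y, mask)]  # initial guess
    for _ in range(iters):
        g = gradient(x, y, mask, lam)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


# Every other sample of a ramp is missing (the zeros are placeholders).
y = [0, 0, 2, 0, 4, 0, 6]
mask = [1, 0, 1, 0, 1, 0, 1]
x = reconstruct(y, mask)
print([round(v, 2) for v in x])
```

The missing entries come out close to 1, 3 and 5, because the smoothness prior pulls each unobserved value toward the average of its neighbors while the data term keeps the observed values near their measurements.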