Demonstrations

We show the workings of the algorithm in four different settings.

Reconstruction of images from multiple frames always involves some form of image registration. Image registration on arbitrary images, however, is not a trivial task. In our first two cases we want to show that the problem becomes much easier if exact information about the image sensor (the camera) is available. Given an image scene, we simulate the movement of a camera capturing a series of images of the scene. The captured low-resolution images partially overlap and can therefore be fused into a high-resolution image of the entire scene. Since the camera parameters are known, the relative placement of the images is determined. The camera performs only translations, and its distance from the scene surface is assumed to be fixed. In this trivial case we have perfect registration and assume no blur. This is not an ad hoc simplification; it is realistic in the setting of pure computer graphics, where images are generated rather than acquired.
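The camera model of this first case can be sketched in a few lines. This is a minimal Python sketch under our own assumptions, not the demo's MATLAB code: the scene is a 2D list of intensities, the camera position is an integer offset `(x0, y0)` on the high-resolution grid, and, because there is no blur, each low-resolution pixel is a point sample taken every `factor` pixels. The function name `capture_frame` is hypothetical.

```python
def capture_frame(scene, x0, y0, width, height, factor):
    """Hypothetical case-one camera: pure translation, no blur.

    Low-resolution pixel (i, j) is the point sample of the scene at
    high-resolution position (y0 + i * factor, x0 + j * factor).
    """
    return [
        [scene[y0 + i * factor][x0 + j * factor] for j in range(width)]
        for i in range(height)
    ]


# Usage: an 8x8 ramp scene sampled with factor 4 from camera offset (1, 2).
scene = [[y * 8 + x for x in range(8)] for y in range(8)]
frame = capture_frame(scene, 1, 2, 2, 2, 4)
print(frame)  # [[17, 21], [49, 53]]
```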

The camera scans the scene along a random path while generating enough overlapping frames. As can be seen, each frame captures only a small area of the image. The downsampling factor is 4, so the image data is rather sparse. This makes the reconstruction all the more impressive, although a large number of frames is necessary.
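Because the camera offsets are known exactly and there is no blur, fusing the frames reduces to placing every low-resolution sample at its known high-resolution position. The sketch below is a simple shift-and-add fusion, chosen by us for illustration (the demo itself may use its iterative algorithm here as well). It averages samples that land on the same pixel and marks pixels that no frame has observed yet as `None`. In this point-sampling model, a factor of 4 means all 16 sub-pixel offsets must be visited before every pixel is known, which is one reason so many frames are needed. The name `fuse_frames` is hypothetical.

```python
def fuse_frames(frames, hr_height, hr_width, factor):
    """Shift-and-add fusion of frames with known integer offsets.

    frames: list of (x0, y0, lr) where lr[i][j] was sampled at
    high-resolution position (y0 + i * factor, x0 + j * factor).
    Unobserved high-resolution pixels are returned as None.
    """
    total = [[0.0] * hr_width for _ in range(hr_height)]
    count = [[0] * hr_width for _ in range(hr_height)]
    for x0, y0, lr in frames:
        for i, row in enumerate(lr):
            for j, value in enumerate(row):
                y, x = y0 + i * factor, x0 + j * factor
                total[y][x] += value
                count[y][x] += 1
    return [
        [total[y][x] / count[y][x] if count[y][x] else None
         for x in range(hr_width)]
        for y in range(hr_height)
    ]


# Usage: a 4x4 scene, factor 2, one frame for each of the 4 sub-pixel offsets.
scene = [[float(y * 4 + x) for x in range(4)] for y in range(4)]
frames = [
    (x0, y0, [[scene[y0 + 2 * i][x0 + 2 * j] for j in range(2)] for i in range(2)])
    for y0 in range(2) for x0 in range(2)
]
print(fuse_frames(frames, 4, 4, 2) == scene)    # True
print(fuse_frames(frames[:1], 4, 4, 2)[0][:2])  # [0.0, None]
```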

The MATLAB code can display the sequence of images as a short movie.

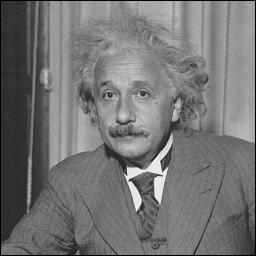

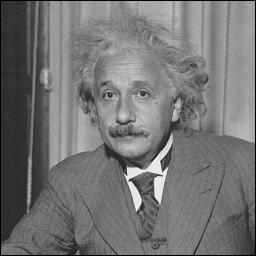

The original image, our well-known Albert. A single frame captured by the camera.

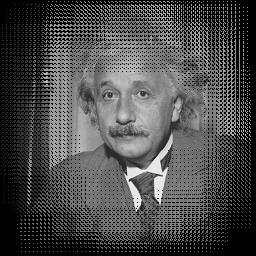

Reconstructed image after the first frame. Reconstructed image after 100 frames.

In case two we extend our model to real-world image acquisition. Any image formation process introduces blur due to the characteristics of the optical system and the image sensor, which are characterized by the point spread function (PSF). If the PSF is not known more precisely, it can be modeled by a two-dimensional Gaussian or, as in our case, by a "triangular" filter of the form

$$
\begin{pmatrix}
0.25 & 0.50 & 0.25 \\
0.50 & 1.0 & 0.50 \\
0.25 & 0.50 & 0.25
\end{pmatrix}
$$
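Both PSF models mentioned above are easy to generate. In the sketch below (function names hypothetical), the triangular kernel is built as the outer product of the 1D tent `[0.5, 1.0, 0.5]` with itself, which reproduces the 3x3 matrix exactly, so the filter is separable. Its entries sum to 4, so we divide by that sum when it is used as a brightness-preserving blur. That normalization is our assumption, since the text does not state it. The Gaussian alternative is sampled on a small grid and normalized the same way; `sigma` and `radius` are free parameters.

```python
import math


def triangular_kernel():
    """3x3 'triangular' PSF as the outer product of a 1D tent filter."""
    tent = [0.5, 1.0, 0.5]
    return [[a * b for b in tent] for a in tent]


def gaussian_kernel(sigma, radius):
    """Sampled 2D Gaussian on a (2*radius+1)^2 grid, normalized to sum 1."""
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]


def normalize(kernel):
    """Scale a kernel so that its entries sum to 1."""
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


tri = triangular_kernel()
print(tri)               # [[0.25, 0.5, 0.25], [0.5, 1.0, 0.5], [0.25, 0.5, 0.25]]
print(normalize(tri)[1]) # [0.125, 0.25, 0.125]
```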

There is some freedom in the choice of the backprojection operator. We achieved the best results using the same filter kernel for the reconstruction.
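The role of the backprojection operator can be made concrete with a minimal sketch of one iterative back-projection update. It is written under our own assumptions rather than taken from the MATLAB code. Camera offsets are integers on the high-resolution grid. The PSF is the triangular kernel normalized to sum 1. Following the remark above, back-projection uses the same kernel shape, rescaled to sum to `factor**2`; for a factor of 2 that is exactly the kernel as printed, which then interpolates the zero-inserted residuals bilinearly. Borders use edge replication, and all function names and the `step` parameter are hypothetical.

```python
KERNEL = [[0.25, 0.50, 0.25],
          [0.50, 1.00, 0.50],
          [0.25, 0.50, 0.25]]


def scaled(kernel, total):
    """Return the kernel rescaled so that its entries sum to `total`."""
    s = sum(sum(row) for row in kernel)
    return [[v * total / s for v in row] for row in kernel]


def convolve3(img, kernel):
    """3x3 filtering with edge replication (the kernel is symmetric,
    so correlation and convolution give the same result)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc
    return out


def simulate(hr, x0, y0, factor, lr_h, lr_w, psf):
    """Forward model: blur the HR image, then keep every factor-th pixel
    starting at the known camera offset (x0, y0)."""
    blurred = convolve3(hr, psf)
    return [[blurred[y0 + i * factor][x0 + j * factor] for j in range(lr_w)]
            for i in range(lr_h)]


def ibp_step(hr, observations, factor, kernel=KERNEL, step=1.0):
    """One back-projection update. observations: list of (x0, y0, lr)."""
    psf = scaled(kernel, 1.0)               # blur preserves mean brightness
    backproj = scaled(kernel, factor ** 2)  # same shape, sums to factor**2
    h, w = len(hr), len(hr[0])
    error = [[0.0] * w for _ in range(h)]   # zero-inserted residuals
    for x0, y0, lr in observations:
        sim = simulate(hr, x0, y0, factor, len(lr), len(lr[0]), psf)
        for i, row in enumerate(lr):
            for j, value in enumerate(row):
                error[y0 + i * factor][x0 + j * factor] += value - sim[i][j]
    spread = convolve3(error, backproj)
    n = len(observations)
    return [[hr[y][x] + step * spread[y][x] / n for x in range(w)]
            for y in range(h)]


# Usage: a flat 4x4 scene observed at all four offsets for factor 2.
psf = scaled(KERNEL, 1.0)
flat = [[5.0] * 4 for _ in range(4)]
obs = [(x0, y0, simulate(flat, x0, y0, 2, 2, 2, psf))
       for y0 in (0, 1) for x0 in (0, 1)]
estimate = ibp_step([[0.0] * 4 for _ in range(4)], obs, 2)
print(estimate[0])  # [5.0, 5.0, 5.0, 5.0]
```

In practice the update is repeated, starting for example from an upsampled first frame, until the estimate stops changing.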

The following image was downsampled by a factor of 2. The camera position is known; the user can click on the image to position the camera.

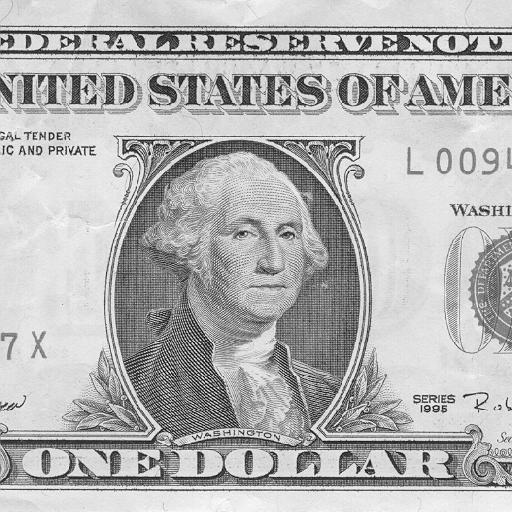

Original image, this is George. A single frame captured by the camera.

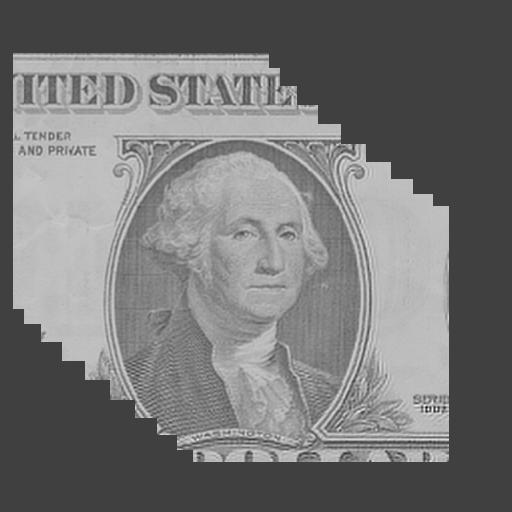

Reconstructed image after one iteration.

Reconstructed image after 10 iterations.

In case three we simulate the movement of the camera in 3D space. Images are captured from very different viewpoints, so the individual frames show geometric distortion due to perspective warp. In the actual implementation, the camera is fixed at the origin and the surface of an object is moved relative to the camera. To simulate the camera we implemented a 3D graphics pipeline.
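The camera-at-the-origin arrangement can be illustrated with a tiny pinhole projection. This sketch is our own simplification of a 3D graphics pipeline, with no clipping, rasterization, or texture sampling. The object is rotated about the y axis and then translated. Each point is then projected with focal length `focal_length` onto the image plane of a camera looking along +z. All function names are hypothetical. The tilted square in the usage example shows the perspective (keystone) distortion: its nearer edge projects longer than its farther edge.

```python
import math


def rotate_y(point, angle):
    """Rotate a 3D point about the y axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def project(point, focal_length):
    """Pinhole projection for a camera at the origin looking along +z."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal_length * x / z, focal_length * y / z)


def view_surface(points, angle, translation, focal_length=1.0):
    """Move the object relative to the fixed camera, then project it."""
    tx, ty, tz = translation
    out = []
    for p in points:
        x, y, z = rotate_y(p, angle)
        out.append(project((x + tx, y + ty, z + tz), focal_length))
    return out


# Usage: a 2x2 square in the z = 0 plane, tilted and pushed away from the camera.
square = [(1, 1, 0), (1, -1, 0), (-1, 1, 0), (-1, -1, 0)]
img = view_surface(square, 0.5, (0, 0, 3))
right_edge = img[0][1] - img[1][1]
left_edge = img[2][1] - img[3][1]
print(right_edge > left_edge)  # True: the nearer edge appears longer
```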

The purpose of this demonstration is to show how 3D objects can be displayed efficiently, even in real time, under natural bandwidth and latency limitations. The idea is to transmit only a "low-resolution" data set, for example over a limited network connection, and to perform super-resolution "in place". This allows a drastic reduction in the amount of data that needs to be transmitted and makes use of the often "idle" processing capacity on the (display) front end. If sufficient information about the camera position is transmitted, images can be reconstructed almost perfectly. This is especially useful for images with fine detail and textures, and for interactive applications where latency and bandwidth are an issue.
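The data reduction can be quantified with simple arithmetic. The sketch below assumes uncompressed frames with 3 bytes per pixel, downsampling by `factor` in each direction, and a camera pose sent as six 4-byte floats. All three are our assumptions, not figures from the demo. Under them, each transmitted frame shrinks by roughly `factor**2`.

```python
def frame_bytes(width, height, factor=1, bytes_per_pixel=3):
    """Uncompressed size of one frame downsampled by `factor` per axis."""
    return (width // factor) * (height // factor) * bytes_per_pixel


POSE_BYTES = 6 * 4  # assumed: 3 rotation + 3 translation values as float32

full = frame_bytes(640, 480)
low = frame_bytes(640, 480, factor=4) + POSE_BYTES
print(full, low, round(full / low, 1))  # 921600 57624 16.0
```

This is a per-frame figure. Reconstruction accumulates many low-resolution frames, so the overall saving depends on how many frames the viewer needs before the image looks sharp.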

Original image. Camera view. After one iteration. After 5 iterations.

In case four we are given a set of images of unknown origin, meaning that the viewpoint and perspective of the image sensor are unknown or imprecise. The reconstruction algorithm then has to start with image registration, the process of finding the relative orientation of each image in the set with respect to a reference image or coordinate system. Solving the registration problem in general requires motion estimation, and several methods for image registration have been developed.
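As a simple illustration of motion estimation, the sketch below finds the integer translation between two images by exhaustive search. It minimizes the mean squared difference over the overlapping region. This is only one of many possible methods, chosen here for brevity. Super-resolution needs sub-pixel accuracy and often rotation as well, so treat this as a starting point only. The function name `register_translation` is hypothetical.

```python
def register_translation(reference, moving, max_shift):
    """Exhaustive search for the integer shift (dx, dy) minimizing the mean
    squared difference between moving[y][x] and reference[y + dy][x + dx]
    over the pixels where both images overlap."""
    h, w = len(reference), len(reference[0])
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            for y in range(len(moving)):
                for x in range(len(moving[0])):
                    ry, rx = y + dy, x + dx
                    if 0 <= ry < h and 0 <= rx < w:
                        d = moving[y][x] - reference[ry][rx]
                        total += d * d
                        count += 1
            if count:
                score = total / count
                if best is None or score < best[0]:
                    best = (score, dx, dy)
    return best[1], best[2]


# Usage: `moving` is a 6x6 crop of `reference` taken at offset (2, 1).
reference = [[y * 10 + x for x in range(8)] for y in range(8)]
moving = [[reference[y + 1][x + 2] for x in range(6)] for y in range(6)]
print(register_translation(reference, moving, 2))  # (2, 1)
```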

Well-registered images allow resolution enhancement similar to the special cases demonstrated above. Insufficiently registered images introduce additional blur and naturally lead to inferior results; the super-resolution algorithm may even fail to converge.

Below are three images that were scanned at low resolution in different orientations.

We register the images and use the registration parameters (in this case only rotation and translation) to apply the resolution enhancement algorithm to the registered images.
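Once the rotation angle and translation of a frame are known, each of its low-resolution pixels can be mapped into the high-resolution reference grid. After that, the samples can be fused much as in the known-camera cases, with interpolation, since rotated samples rarely fall on grid points. The sketch below assumes the translation is given in low-resolution pixels and the rotation is about the frame origin. Both are our assumptions, and the function name is hypothetical.

```python
import math


def to_reference(x, y, angle, tx, ty, factor):
    """Map low-resolution pixel (x, y) to high-resolution reference coordinates.

    The frame is rotated by `angle` (radians) about its origin, translated by
    (tx, ty) low-resolution pixels, and finally scaled by `factor`.
    """
    c, s = math.cos(angle), math.sin(angle)
    xr = c * x - s * y + tx
    yr = s * x + c * y + ty
    return factor * xr, factor * yr


print(to_reference(3, 4, 0.0, 1.0, 0.0, 2))  # (8.0, 8.0)
```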

After registration. After reconstruction.