Class Project

EE362/PSYCH221: Applied Vision and Imaging Systems (Winter Quarter 2005-2006)

Title

Image-Based Rendering using Disparity Compensated Interpolation

Submitted by

Aditya Mavlankar

Introduction:

Virtual view synthesis refers to the generation

of a view of a scene from an arbitrary or novel view-point. Image-based

rendering (IBR) techniques generate a novel view from a set of available images

or key views. Unlike traditional three-dimensional (3-D) computer graphics, in

which 3-D geometry of the scene is known, IBR techniques render novel views

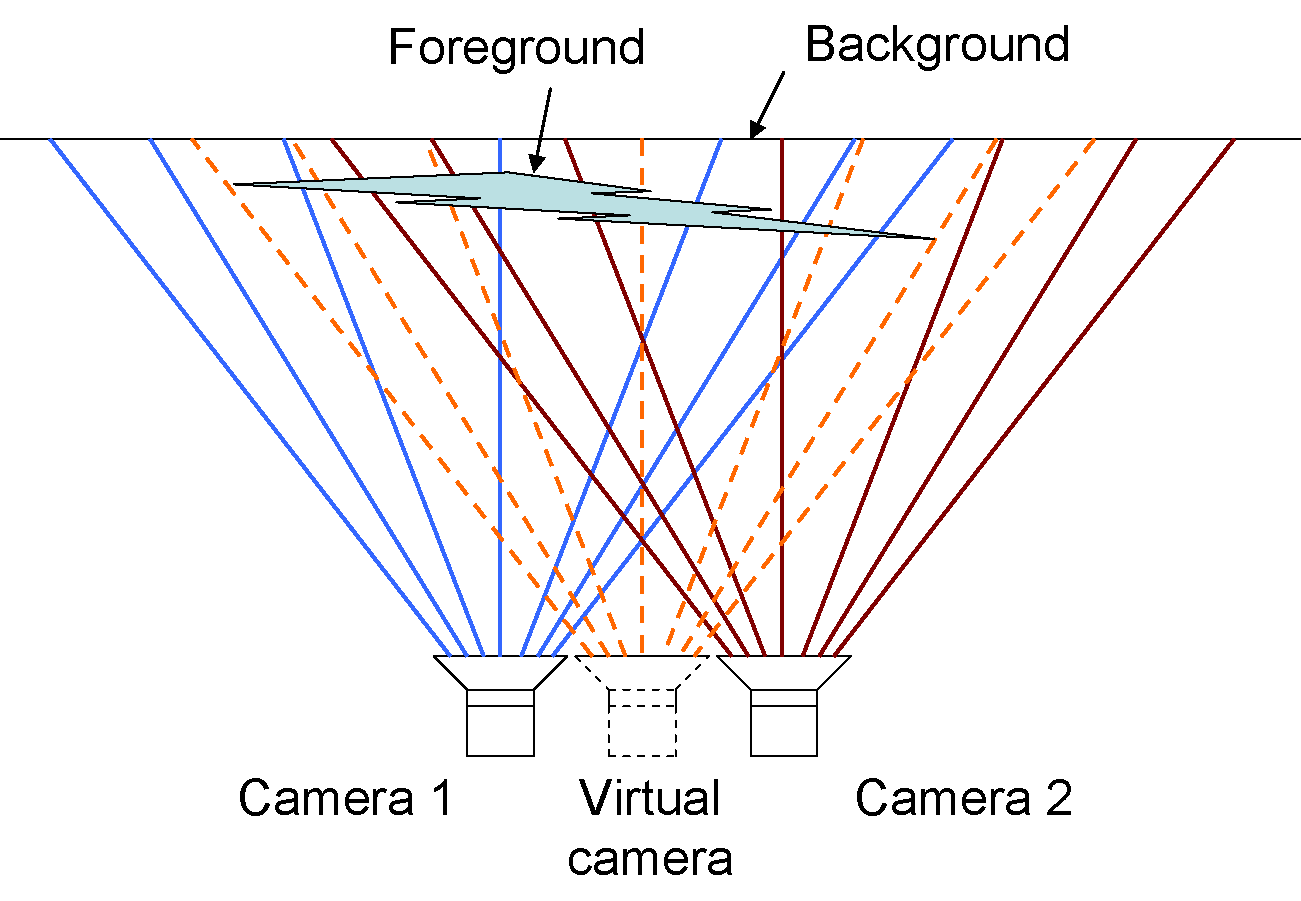

directly from input images. Figure 1 illustrates this idea. Camera number 1 and

camera number 2 are real cameras that capture the same scene from different

view-points. Also shown is a virtual camera placed at a view-point which is

between the two real cameras. The goal is to render a novel view observed by

this virtual camera.

Figure 1: Virtual view synthesis.

A survey of IBR techniques is presented in [1]. This survey classifies IBR techniques into three categories according to how much geometric information is used:

1) Rendering with explicit geometry (either with approximate or accurate geometry).

2) Rendering with implicit geometry (i.e., correspondence).

3) Rendering without geometry.

Rendering with explicit geometry:

This category is represented by techniques such as 3-D warping, layered depth image (LDI) rendering, and view-dependent texture mapping. 3-D warping [2] assumes that depth information is available for every point in one or more images. LDI [3] improves on 3-D warping by handling the disocclusion artifacts that 3-D warping produces. LDI, however, assumes knowledge of what lies behind the visible surface. Texture maps can be generated by applying computer vision techniques to captured images. View-dependent texture mapping [4] first warps the textures from different view-points to a common surface and then blends them.
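To make the role of per-pixel depth in 3-D warping concrete, here is a minimal sketch of reprojecting a single pixel into a translated camera. It is only an illustration under simplifying assumptions and not the formulation of [2]: a pinhole camera with one focal length `f` and principal point `(cx, cy)`, identical intrinsics for both cameras, and a pure translation `t` with no rotation. The function name and all parameters are hypothetical.

```python
def warp_pixel(u, v, depth, f, cx, cy, t):
    """Reproject pixel (u, v) with known depth into a camera translated by t.

    Assumptions (illustrative only): pinhole model, shared intrinsics,
    translation t = (tx, ty, tz) expressed in the reference camera frame,
    no rotation between the two cameras.
    """
    # Back-project the pixel to a 3-D point in the reference camera frame.
    x3 = (u - cx) * depth / f
    y3 = (v - cy) * depth / f
    z3 = depth
    # Express the same point in the frame of the translated camera.
    xn, yn, zn = x3 - t[0], y3 - t[1], z3 - t[2]
    if zn <= 0:
        return None  # the point lies behind the new camera
    # Project into the new image with the same intrinsics.
    return (f * xn / zn + cx, f * yn / zn + cy)


# A point at depth 2 shifts less than a point at depth 1 for the same
# sideways camera motion, which is the parallax that the warp reproduces.
far = warp_pixel(320, 240, 2.0, 500, 320, 240, (0.1, 0.0, 0.0))
near = warp_pixel(320, 240, 1.0, 500, 320, 240, (0.1, 0.0, 0.0))
```

Because near and far points move by different amounts, regions hidden in the reference image can become visible in the new view; these are the disocclusions mentioned above.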

Rendering with implicit geometry:

View interpolation [5] and view morphing [6] are representative of this category. Methods in this category rely on positional correspondences across a small number of images to render new views. The positional correspondences are typically generated from a sparse set of correspondences (matching points) input by the user. Although geometry is not directly available, 3-D information is computed using the usual projection calculations.

Rendering without geometry:

Light field rendering [7] and lumigraph systems [8] are the main techniques in this category. These techniques do not rely on any geometric information; instead, they rely on oversampling to counter undesirable aliasing effects in the output display.

Goal:

The purpose of this project is to develop a rendering technique that requires neither depth information nor correspondence input, and that works well when the disparity between the two views captured by two adjacent cameras is not too high, i.e., the two key images depict the same objects from slightly different view-points. The computational complexity should preferably be bounded by the image resolution (spatial size of the image) rather than by the scene complexity. The view-point for the novel view can be anywhere on the line joining the two camera centers.
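As a rough illustration of rendering a view-point on the line between the two camera centers, the sketch below forward-maps a single scanline using per-pixel disparities. It is not the algorithm developed in this project, only a minimal example under stated assumptions: the views are rectified so that disparity is purely horizontal, the disparity of each left-view pixel is already known (how it is obtained is outside this sketch), and `alpha` in [0, 1] is a hypothetical parameter giving the virtual camera position as a fraction of the baseline (0 for the left camera, 1 for the right camera).

```python
import math


def interpolate_scanline(left, right, disparity, alpha):
    """Forward-map one scanline to a virtual view at fraction alpha of the baseline.

    Assumes left[x] corresponds to right[x - disparity[x]] (rectified views).
    Returns a list in which None marks holes that no source pixel mapped to.
    """
    width = len(left)
    out = [None] * width
    for x in range(width):
        d = disparity[x]
        xr = x - d  # matching column in the right view
        if not 0 <= xr < width:
            continue  # the correspondence falls outside the right image
        # Column in the virtual view, rounded half-up to the nearest pixel.
        xv = int(math.floor(x - alpha * d + 0.5))
        if 0 <= xv < width:
            # Blend the corresponding samples, weighting the nearer camera more.
            # Later pixels overwrite earlier ones; occlusions are not handled.
            out[xv] = (1 - alpha) * left[x] + alpha * right[xr]
    return out


# Two bright pixels shifted by a disparity of 2 land halfway in between.
left = [0, 0, 5, 7]
right = [5, 7, 0, 0]
middle = interpolate_scanline(left, right, [2, 2, 2, 2], 0.5)
```

The loop visits each pixel once, so the cost of this sketch grows with the image size and not with the number of objects in the scene, which matches the complexity goal stated above.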

The IBR technique would then be used to generate a video of view-point traversal in a static natural scene, and the effect of inserting novel views on the viewing experience would be observed. How many intermediate views are required for a smooth traversal? How sensitive are we to the quality of these intermediate novel views? Can we tolerate the artifacts in the novel views produced by our proposed algorithm?

(One famous example of view-point traversal is from the

References:

[1] H.-Y. Shum, S. B. Kang and S.-C. Chan, "Survey of image-based representations and compression techniques," IEEE Transactions on Circuits and Systems for Video Technology, Vol. 13, No. 11, pp. 1020-1037, Nov. 2003.

[2] L. McMillan, "An image-based approach to three-dimensional computer graphics," Ph.D. dissertation, Dept. Comput. Sci., Univ.

[3] J. Schade,

[4] P. E. Debevec, C. J. Taylor and J. Malik, "Modeling and rendering architecture from photographs: A hybrid geometry- and image-based approach," Proc. ACM Annual Computer Graphics Conf., pp. 11-20, Aug. 1996.

[5] S. Chen and L. Williams, "View interpolation for image synthesis," Proc. ACM Annual Computer Graphics Conf., pp. 279-288, Aug. 1993.

[6] S. M. Seitz and C. R. Dyer, "View morphing," Proc. ACM Annual Computer Graphics Conf., pp. 21-30, New Orleans, LA, Aug. 1996.

[7] M. Levoy and P. Hanrahan, "Light field rendering," Proc. ACM Annual Computer Graphics Conf., pp. 31-42, New Orleans, LA, Aug. 1996.

[8] S. J. Gortler, R. Grzeszczuk, R. Szeliski, and M. F. Cohen, "The lumigraph," Proc. ACM Annual Computer Graphics Conf., pp. 43-54,