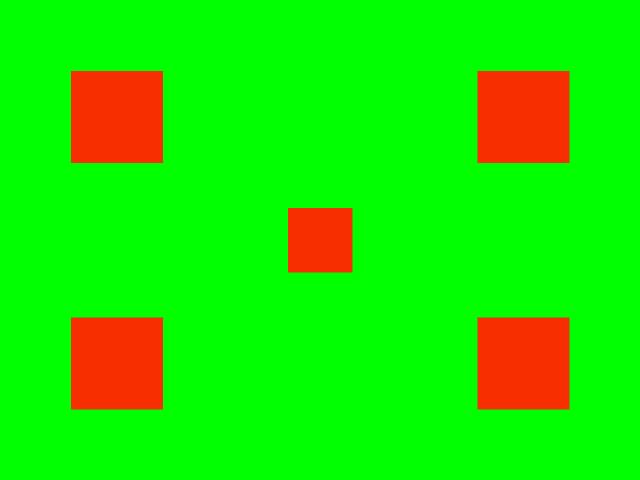

they are compared to the original images. This comparison is done by calculating the

difference between the original image target colors and the final image target colors.

This calculation is done with the CompareImagesCenterYes.m

script. The target colors from the final images are obtained by averaging together the

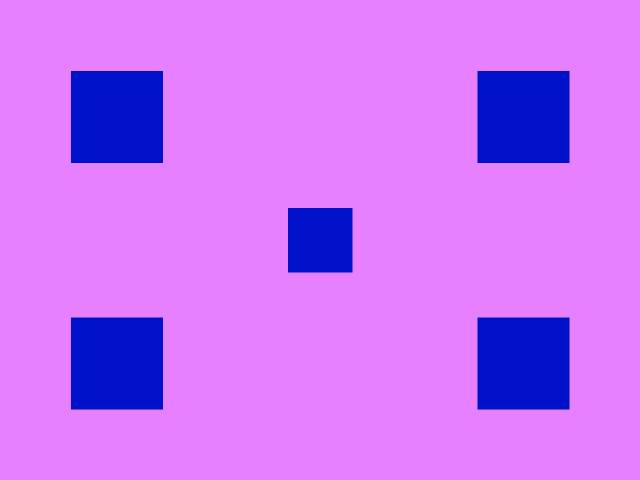

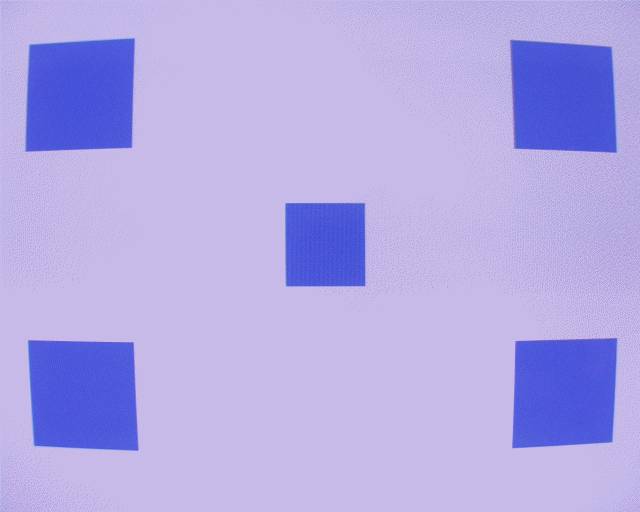

pixels in the four corner squares as shown in the picture to the right. These differences

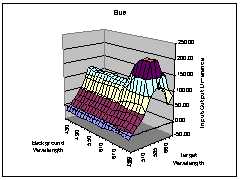

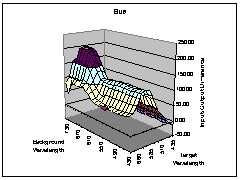

are then plotted relative to the original image background colors. This results are shown

in the following plots which describing the camera's color balancing results. This data in also

stored in the centerYesDifferences.mat file.
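The averaging and differencing steps described above can be sketched as follows. This is a minimal illustration in plain Python, not the contents of CompareImagesCenterYes.m, which is not reproduced here. The function names, the nested-list image layout, the square size, and the sign convention (final minus original, so a drop shows up as a negative value) are all assumptions made for the example.

```python
def mean_color(image, top, left, size):
    """Average the RGB pixels of a size x size square with top-left corner (top, left)."""
    pixels = [image[r][c]
              for r in range(top, top + size)
              for c in range(left, left + size)]
    n = len(pixels)
    return tuple(sum(p[ch] for p in pixels) / n for ch in range(3))


def corner_target_color(image, size):
    """Average the four corner squares of an image given as rows of (R, G, B) tuples."""
    height, width = len(image), len(image[0])
    corners = [(0, 0), (0, width - size),
               (height - size, 0), (height - size, width - size)]
    means = [mean_color(image, top, left, size) for top, left in corners]
    return tuple(sum(m[ch] for m in means) / len(means) for ch in range(3))


def target_difference(original_target, final_image, size):
    """Per-channel difference, assumed here to be final minus original."""
    final_target = corner_target_color(final_image, size)
    return tuple(f - o for f, o in zip(final_target, original_target))


if __name__ == "__main__":
    # Hypothetical 4x4 image: black everywhere except the four corner pixels.
    corner = (100, 50, 200)
    img = [[(0, 0, 0)] * 4 for _ in range(4)]
    for r, c in [(0, 0), (0, 3), (3, 0), (3, 3)]:
        img[r][c] = corner
    print(target_difference((120, 50, 100), img, size=1))
```

Averaging over several squares rather than reading a single pixel reduces the influence of sensor noise on the measured target color.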

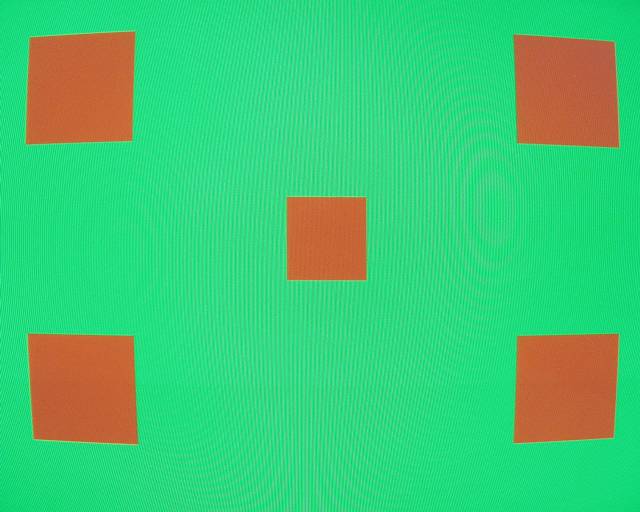


Plot columns: Red, Green, Blue

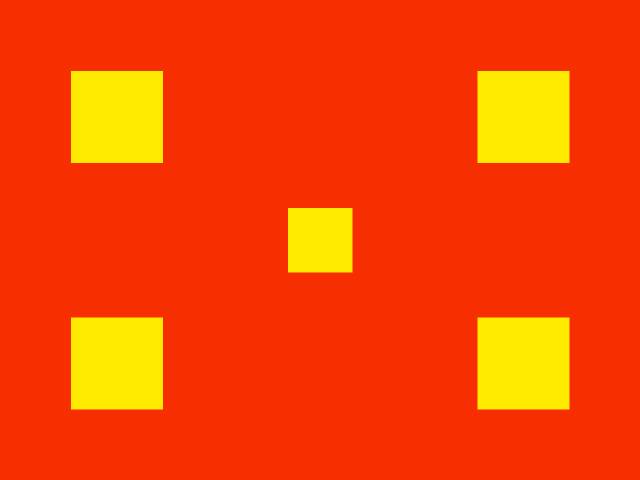

The long background wavelengths cause the Red values to drop by almost 100 and the Blue values to rise by over 200. The Green values, however, remain fairly constant as the background color changes. As a result, a yellow input target color shifts to cyan.
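The arithmetic behind the yellow-to-cyan shift can be checked with a small example. The yellow starting value below is hypothetical, and the shift uses rounded versions of the figures quoted above (about -100 for Red, roughly 0 for Green, about +200 for Blue); neither is taken from the measured data.

```python
def apply_shift(color, shift):
    """Add a per-channel shift to an RGB color and clamp to the 8-bit range 0..255."""
    return tuple(max(0, min(255, c + s)) for c, s in zip(color, shift))


yellow = (230, 230, 20)   # hypothetical yellow target: high R and G, low B
shift = (-100, 0, 200)    # rounded shifts for a long-wavelength background
shifted = apply_shift(yellow, shift)
print(shifted)  # (130, 230, 220)
```

With Green and Blue both high and Red reduced, the shifted color reads as cyan rather than yellow.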


Camera Algorithm Simulation

- The overall differences between the Gray World algorithm outputs and the camera outputs are smaller than those between the Perfect Reflector algorithm outputs and the camera outputs: [-50, 50] vs. [-170, 100].

- Both visual inspection and the output data for the two Stanford Tower images indicate that the output images of the Gray World algorithm are closer to the output images of the camera.
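For reference, the two algorithms compared above can be sketched in their textbook forms. This is an illustrative sketch only: the project's own implementation is not shown here and may differ, and the function names and the flat list-of-pixels layout are assumptions. Gray World scales each channel so that its mean matches the average of the three channel means; Perfect Reflector scales each channel so that its brightest value maps to white.

```python
def gray_world_gains(pixels):
    """Per-channel gains that make every channel mean equal to the overall mean."""
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    gray = sum(means) / 3
    return [gray / m if m > 0 else 1.0 for m in means]


def perfect_reflector_gains(pixels, white=255):
    """Per-channel gains that map each channel's maximum value to white."""
    maxima = [max(p[ch] for p in pixels) for ch in range(3)]
    return [white / m if m > 0 else 1.0 for m in maxima]


def apply_gains(pixels, gains):
    """Scale each channel by its gain, round, and clamp to 255."""
    return [tuple(min(255, round(p[ch] * gains[ch])) for ch in range(3))
            for p in pixels]


if __name__ == "__main__":
    # Hypothetical two-pixel image with a reddish cast.
    pixels = [(100, 50, 25), (200, 150, 75)]
    print(apply_gains(pixels, gray_world_gains(pixels)))
    print(apply_gains(pixels, perfect_reflector_gains(pixels)))
```

The two methods can disagree noticeably on the same image, because one depends on the channel averages and the other only on the brightest values.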

Future Work

The project can be extended in the following respects:

- Evaluate other color balancing algorithms, such as color correlation.

- Simulate the camera's color balancing with a combination of two or more algorithms, or with some optimization.

trek@alumni.stanford.edu lihui@leland.stanford.edu