|

|

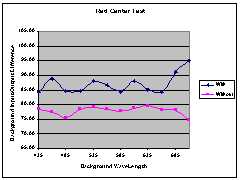

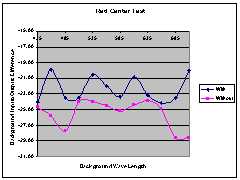

| With Center | Without Center |

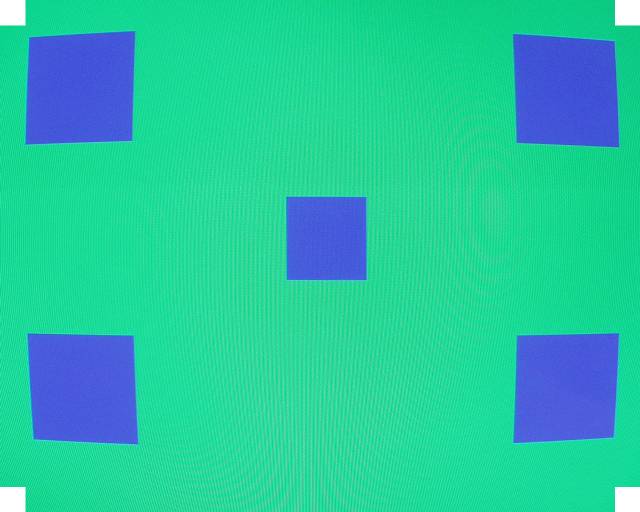

|

|

|

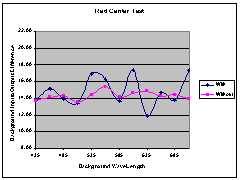

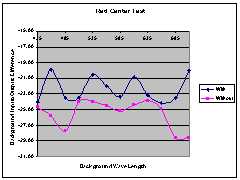

| Red Color Test | Green Color Test | Blue Color Test |

|

|

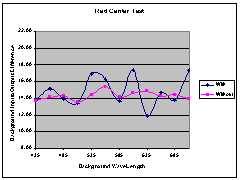

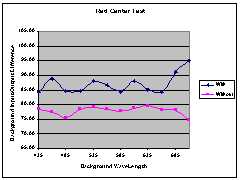

| With Center | Without Center |

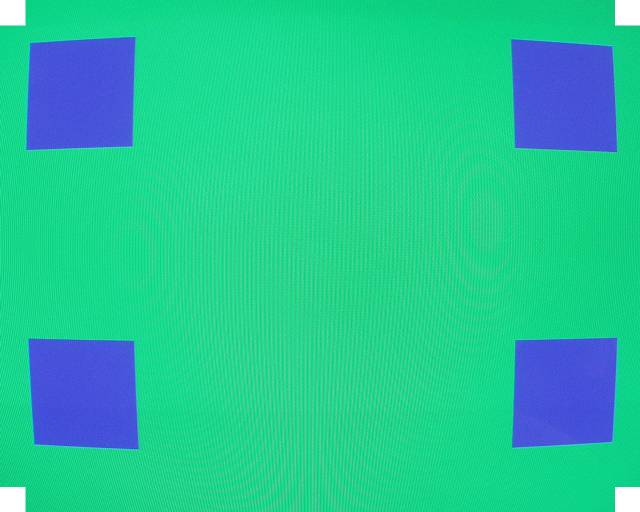

|

|

|

| Red Color Test | Green Color Test | Blue Color Test |